Over the years, Google seems to have set a pattern in how it interacts with the web. The search engine offers structured data formats and tools that let us supply information to Google. Think: meta tags, schema markup, the disavow tool, and more.

Google then consumes and learns from this structured data deployed on the web. Once it has extracted enough learnings, Google deprecates or de-emphasizes these structured data formats, making them less impactful or obsolete.

This cyclical process of providing structured data capabilities, consuming the information, learning from it, and then removing or diminishing those capabilities appears to be a core part of Google’s strategy.

It allows the search engine to temporarily empower SEOs and brands as a means to an end: mining data to refine its algorithms and continually improve its understanding of the web.

This article explores this pattern of “give and take” through several examples.

Google’s give-and-take pattern

The pattern can be divided into four stages:

Structure: Google offers structured ways to interact with search snippets or its ranking algorithms. For example, in the past, meta keywords could tell Google which keywords were relevant to a given web page.

Consume: Google collects web data by crawling websites. This step is important. Without consuming data from the web, Google has nothing to learn from.

Learn: Google makes use of the fresh crawl data gathered after its recommended structures have been implemented. What were the reactions to the tools or code snippets Google proposed? Were these changes helpful, or were they abused? Google can now confidently make changes to its ranking algorithms.

Retire: Once Google has learned what it can, there is no reason to keep relying on us to feed it structured information. Leaving these incoming data pipes intact will invariably lead to abuse over time, so the search engine must learn to survive without them. Google’s suggested structure is deprecated in many (though not all) cases.

The race is for the search engine to learn from webmasters’ interactions with Google’s suggested structure before they can learn to manipulate it. Google usually wins this race.

This doesn’t mean that no one can take advantage of new structural elements before Google discards them. It simply means that Google tends to discard such elements before illegitimate manipulations become widespread.

Give-and-take examples

1. Metadata

In the past, meta keywords and meta descriptions played a crucial role within Google’s ranking algorithms. The initial support for meta keywords within search engines predates the founding of Google in 1998.

Implementing meta keywords was a way for a web page to tell a search engine the terms for which the page should be found. However, such a straightforward and useful piece of code was quickly abused.

Many webmasters injected thousands of keywords per page in the interest of getting more search traffic than was fair. This quickly led to the rise of low-quality websites full of ads that unfairly converted the acquired traffic into advertising revenue.
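To make the mechanics concrete, here is a minimal Python sketch of how a crawler might read a page’s meta keywords and meta description and flag an implausibly long keyword list. The sample page, the function names, and the threshold of 20 keywords are all assumptions made for illustration; nothing here reflects how Google actually processed these tags.

```python
from html.parser import HTMLParser

# Arbitrary illustrative threshold, not a documented search engine limit.
MAX_REASONABLE_KEYWORDS = 20


class MetaTagReader(HTMLParser):
    """Collects the content of the keywords and description meta tags."""

    def __init__(self):
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("keywords", "description"):
            self.meta[name] = attributes.get("content") or ""


def read_meta(html):
    """Return the keyword list and description declared in the page head."""
    reader = MetaTagReader()
    reader.feed(html)
    raw_keywords = reader.meta.get("keywords", "")
    keywords = [k.strip() for k in raw_keywords.split(",") if k.strip()]
    return {"keywords": keywords, "description": reader.meta.get("description", "")}


def looks_stuffed(keywords, limit=MAX_REASONABLE_KEYWORDS):
    """Flag keyword lists that are longer than the (assumed) sensible limit."""
    return len(keywords) > limit


# Hypothetical page used only for this example.
SAMPLE_PAGE = """
<html><head>
<meta name="keywords" content="running shoes, trail running, marathon training">
<meta name="description" content="A hypothetical guide to choosing running shoes.">
</head><body></body></html>
"""

result = read_meta(SAMPLE_PAGE)
print(result["keywords"])
print(looks_stuffed(result["keywords"]))
```

A page declaring thousands of keywords would trip the `looks_stuffed` check, which is the kind of signal that made the tag unreliable as a ranking input.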

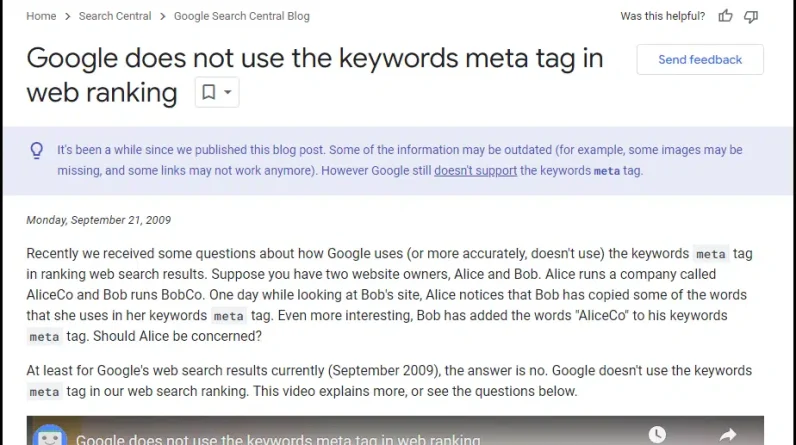

In 2009, Google confirmed what many had suspected for years. Google stated:

“At least for Google web search results currently (September 2009), the answer is no. Google does not use the keyword meta tag in our web search ranking.”

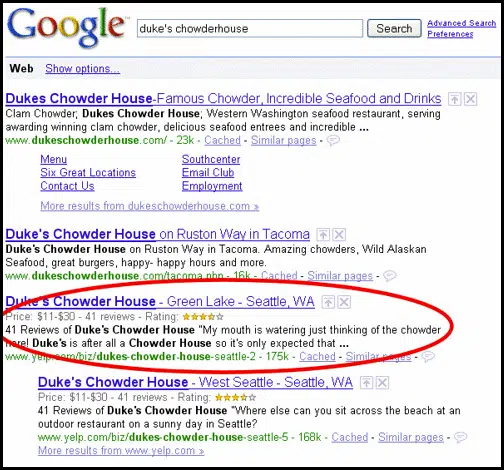

Another example is the meta description, a piece of code that Google has supported since its inception. Meta descriptions were used as snippet text under a link in Google search results.

As Google improved, it started to ignore meta descriptions in certain situations. This is because users can reach the same web page through many different search queries.

If a web page talks about several topics and a user searches for a term related to topic 3, it would not be useful to show a snippet with a description of topics 1 or 2.

So Google started rewriting search snippets based on the user’s search intent, sometimes ignoring a page’s static meta description.

In recent times, Google has shortened search snippets and even confirmed that they primarily look at the main content of a page when generating descriptive snippets.

2. Schema and structured data

Google introduced schema support (a form of structured data) in 2009.

It initially pushed the “microformats” style of schema, where individual elements had to be marked up within the HTML to feed structured or contextual information to Google.

In terms of concept, this is actually not too far from the thinking behind HTML meta tags. Surprisingly, a new encoding syntax was adopted instead of using meta tags more widely.

For example, the idea of schema markup was initially (and largely continues to be) to provide additional contextual information about data or code that has already been deployed, which is similar to the definition of metadata:

“Information that describes other information to help you understand or use it.”

Both schema and metadata attempt to achieve this same goal: information that describes other existing information to help the user take advantage of it. However, the detail and structural hierarchy of schema made it (in the end) much more scalable and effective.

Today, Google still uses schema for contextual awareness and detail about various web entities (e.g., web pages, organizations, reviews, videos, products; the list goes on).

That said, Google initially allowed schema to modify the visuals of a page’s search listing with a large degree of control. You could easily add star ratings to your pages in Google’s search results, making them stand out (visually) from competing web results.

As usual, some began abusing these powers to outperform less SEO-conscious competitors.

In February 2014, Google started talking about penalties for rich snippet spam. This was when people misused schema to make their search results look better than others, even though the information behind them was incorrect. For example, a site with no reviews might claim an aggregate review score of 5 stars (clearly false).

Fast forward to 2024, and while still situationally useful, schema isn’t as powerful as it once was. Delivery is easier, thanks to Google’s preference for JSON-LD. However, schema no longer has absolute power to control the visuals of a search listing.
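As a rough illustration of JSON-LD delivery, the Python sketch below builds schema.org Product markup with an AggregateRating and refuses to emit a rating that has no reviews behind it, which is the false-rating case described above. The product name, the numbers, and the function names are hypothetical, and the 1 to 5 range assumes the default schema.org rating scale.

```python
import json


def product_json_ld(name, rating_value, review_count):
    """Build schema.org Product markup with an AggregateRating as JSON-LD."""
    if review_count < 1:
        # A star rating with no reviews behind it is the kind of markup
        # that rich snippet spam penalties were aimed at, so do not emit it.
        raise ValueError("aggregateRating requires at least one real review")
    if not 1 <= rating_value <= 5:
        # Assumes the default schema.org scale (worst 1, best 5).
        raise ValueError("rating_value must be between 1 and 5")
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }
    return json.dumps(data, indent=2)


def as_script_tag(json_ld):
    """Wrap a JSON-LD string in the script tag used to embed it in a page."""
    return '<script type="application/ld+json">\n' + json_ld + "\n</script>"


# Hypothetical product and review figures, for illustration only.
print(as_script_tag(product_json_ld("Example Trail Shoe", 4.4, 89)))
```

Because the markup sits in a single script block rather than being woven through the HTML, it can be generated from the same data source that stores the real reviews, which keeps the markup and the visible content in agreement.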


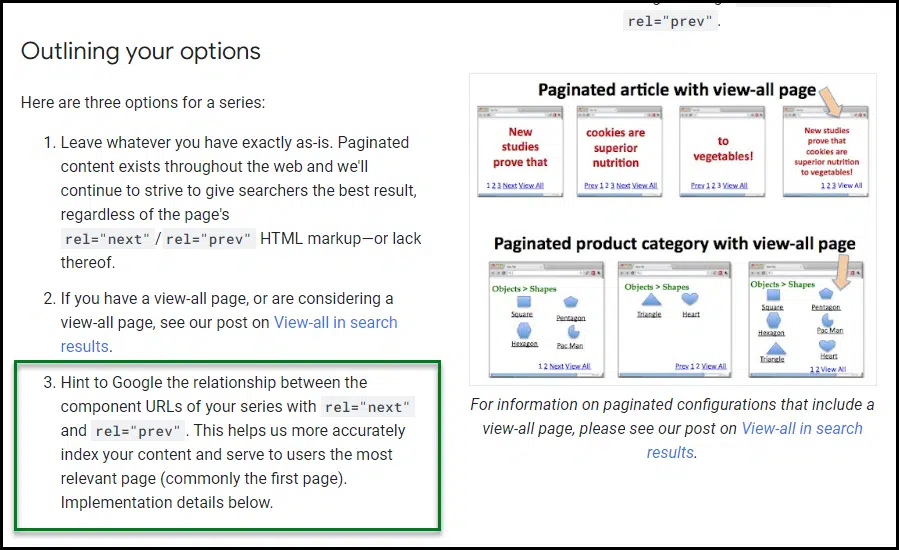

3. Rel=Prev / Next

Rel="prev" and rel="next" were two HTML attributes that Google proposed in 2011. The idea was to help Google develop a more contextual awareness of how certain types of paginated URLs were interrelated.
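For readers who never implemented them, the following Python sketch generates the link tags that one page in a paginated series would have carried in its head. The `?page=N` URL scheme and the function name are assumptions made for the example; real sites used whatever pagination pattern their platform produced.

```python
def pagination_links(base_url, page, total_pages):
    """Return the rel="prev" / rel="next" link tags for one page of a series."""
    if not 1 <= page <= total_pages:
        raise ValueError("page must be between 1 and total_pages")

    def page_url(number):
        # Assumption: page 1 lives at the base URL, later pages use ?page=N.
        return base_url if number == 1 else f"{base_url}?page={number}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags


# Page 2 of a hypothetical three-page blog archive.
for tag in pagination_links("https://example.com/blog", 2, 3):
    print(tag)
```

The first page only points forward and the last page only points back, so every template that rendered a paginated listing needed this logic.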

Eight years later, Google announced that it no longer supported them. It also said that it had not used this type of markup for some time, suggesting that support ended around 2016, just five years after the attributes were first proposed.

Many were understandably upset, since the tags were difficult to implement, often requiring actual web developers to recode aspects of website themes.

Increasingly, it seemed like Google would suggest complex code changes one moment only to abandon them the next. In reality, it’s likely that Google simply learned all it needed to from the rel=prev / next experiment.

4. Disavow tool

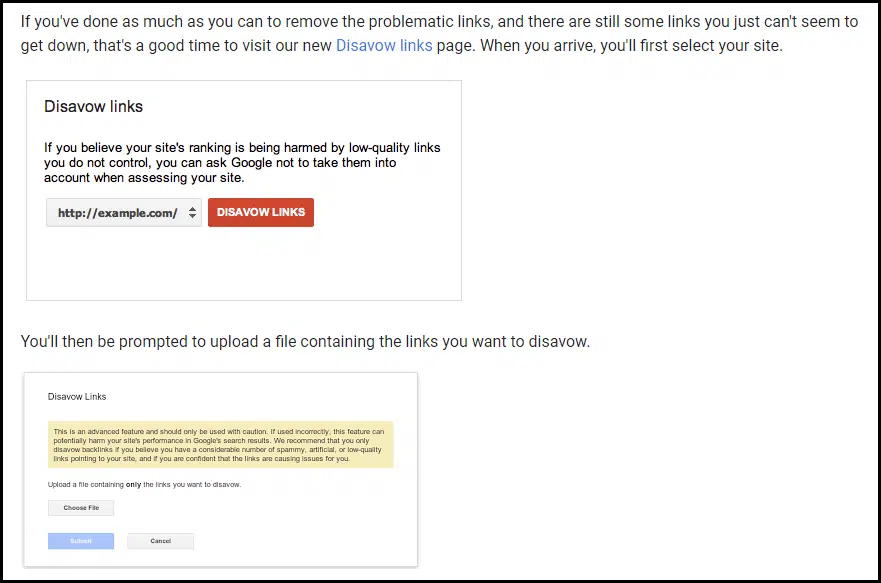

In October 2012, the web buzzed with news of Google’s new Disavow links tool.

In April 2012, Google released the Penguin update, which sent the web into a state of confusion. The update targeted a lot of off-site spam (link building) activity, and many websites saw manual action notices appear in Search Console (then called Webmaster Tools).

With the Disavow tool, you could upload lists of linking pages or domains that you wanted excluded from Google’s ranking algorithms. If the uploaded links closely matched Google’s internal assessment of your backlink profile, the active manual penalty might be lifted.
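The uploaded list is a plain text file with one entry per line: a full URL, a `domain:` rule for a whole domain, or a `#` comment. As a hedged sketch, the Python below assembles such a file from lists of domains and URLs. The domains, the note, and the function name are hypothetical, and the deduplication only matches exact hostnames.

```python
from urllib.parse import urlparse


def build_disavow_file(domains, urls, note=""):
    """Assemble disavow file text: comments, domain rules, then single URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")

    # Normalize and deduplicate the domains to disavow entirely.
    covered = sorted({d.strip().lower() for d in domains if d.strip()})
    for domain in covered:
        lines.append(f"domain:{domain}")

    for url in sorted(set(urls)):
        host = urlparse(url).hostname or ""
        if host in covered:
            # Already handled by a domain rule (exact hostname match only).
            continue
        lines.append(url)

    return "\n".join(lines) + "\n"


# Hypothetical link data, for illustration only.
text = build_disavow_file(
    domains=["Spammy-Links.example"],
    urls=[
        "http://spammy-links.example/a",
        "http://other.example/bad-page.html",
    ],
    note="Hypothetical link cleanup",
)
print(text)
```

Listing a whole domain is the blunter option. Single URLs are worth keeping only when the rest of the linking site is legitimate.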

This would return a “fair” amount of Google traffic to your site, although obviously, with part of your backlink profile now “disavowed,” post-penalty traffic was usually lower than pre-penalty traffic.

As such, the SEO community had a relatively low opinion of the tool. Still, a full backlink removal or disavow project was usually required. Having less traffic after the penalty was better than no traffic at all.

Disavow projects have not been necessary for years. Google now says that anyone who still offers this service is using outdated practices.

In recent years, Google’s John Mueller has been very critical of those who sell “disavow” or “toxic link” work. It seems as if Google no longer wants us to use this tool; it certainly doesn’t advise us on its use (and hasn’t for many years).


Developing Google’s give-and-take relationship with the web

Google provides tools or code snippets for SEOs to manipulate their search results in minor ways. Once Google gets information from these deployments, these features are often phased out. Google grants us a limited amount of temporary control to facilitate long-term learning and adaptation.

Does that make these small, temporary offerings from Google useless? There are two ways to look at this:

Some people will say, “Don’t jump on the bandwagon! These temporary deployments are not worth the effort.” Others will say, “Google is giving us temporary opportunities for control, so we need to take advantage of them before they’re gone.”

In fact, there is no right or wrong answer. It depends on your ability to adapt to web changes efficiently.

If you’re comfortable with quick changes, implement what you can and react quickly. If your organization doesn’t have the experience or resources to make rapid changes, it doesn’t pay to follow trends blindly.

I think this ebb and flow of give and take doesn’t necessarily make Google bad or evil. Any company will leverage its unique assets to drive learning and business activity.

In this case, we are one of Google’s assets. Whether you want this relationship (between you and Google) to continue is up to you.

You may choose not to cooperate with Google’s trade of temporary power for long-term learning. However, this can put you at a competitive disadvantage.

The views expressed in this article are those of the guest author and not necessarily those of Search Engine Land.
