A leaked Google memo offers a point-by-point summary of why Google is losing out to open source AI and suggests a path back to dominance and ownership of the platform.

The memo opens by acknowledging that Google’s real competitor was never OpenAI and was always going to be open source.

You can’t compete with open source

Furthermore, they admit that they are in no way positioned to compete against open source, acknowledging that they have already lost the battle for AI dominance.

They wrote:

“We’ve done a lot of looking over our shoulders at OpenAI. Who’s going to cross the next milestone? What’s the next move?

But the uncomfortable truth is that we are not positioned to win this arms race and neither is OpenAI. While we fight, a third faction has been quietly eating our lunch.

I’m talking, of course, about open source.

Quite simply, they are beating us. The things that we consider ‘important open problems’ are solved and in people’s hands today.”

Most of the note is devoted to describing how Google is being overtaken by open source.

And while Google has a slight edge over open source, the memo’s author acknowledges that it’s slipping away and never coming back.

The self-analysis of the metaphorical cards they have dealt themselves is decidedly downbeat:

“Although our models still have a slight edge in terms of quality, the gap is closing at an astonishing rate.

Open source models are faster, more customizable, more private, and more capable pound for pound.

They’re doing things with $100 and 13B params that we struggle with at $10M and 540B.

And they do it in weeks, not months.”

Large language model size is not an advantage

Perhaps the most chilling realization expressed in the memo is that Google’s size is no longer an advantage.

The extraordinarily large size of their models is now seen as a disadvantage and by no means the insurmountable advantage it was thought to be.

The leaked memo lists a series of events that indicate that Google’s (and OpenAI’s) control of AI may quickly end.

The memo recounts that barely a month earlier, in March 2023, the open source community obtained a leaked large language model developed by Meta called LLaMA.

Within days and weeks, the global open source community developed all the building blocks needed to create clones of Bard and ChatGPT.

Sophisticated steps such as instruction tuning and reinforcement learning from human feedback (RLHF) were quickly replicated by the global open source community, and cheaply, no less.

Instruction tuning

A process of fine-tuning a language model to make it do something specific that it was not originally trained to do.
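As a rough illustration of what instruction-tuning data looks like, the sketch below renders one instruction/response pair into a single training string. The `format_example` helper and the `### Instruction:` style template are hypothetical choices made for this example; real projects each use their own prompt format.

```python
def format_example(instruction, response, context=""):
    """Render one instruction/response pair as a single training string.

    The section markers are an illustrative template, not a standard.
    """
    parts = ["### Instruction:", instruction.strip()]
    if context.strip():
        # Optional supporting text the model should use when answering.
        parts += ["### Context:", context.strip()]
    parts += ["### Response:", response.strip()]
    return "\n".join(parts)


sample = format_example(
    "Summarize the text in one sentence.",
    "Open source models are catching up quickly.",
    context="A leaked memo argues that open source AI is closing the gap.",
)
print(sample)
```

A fine-tuning run would feed many such strings to a pretrained model so that it learns to continue an instruction with a suitable response.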

Reinforcement Learning from Human Feedback (RLHF)

A technique in which humans rate a language model’s output so that it learns which outputs are satisfactory to humans.

RLHF is the technique used by OpenAI to create InstructGPT, which is a model underlying ChatGPT and allows GPT-3.5 and GPT-4 models to receive instructions and complete tasks.
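One piece of RLHF can be sketched in a few lines: a reward model is typically trained on pairs of answers where a human marked one as better, using a pairwise loss that is small when the preferred answer scores higher. The function name and the example scores below are assumptions for illustration only, and the later step of optimizing the language model against the reward model is not shown.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise preference loss: -log(sigmoid(r_chosen - r_rejected)).

    Written as log(1 + exp(-diff)), which is the same quantity.
    """
    diff = reward_chosen - reward_rejected
    return math.log1p(math.exp(-diff))


# Hypothetical reward scores for two answers to the same prompt.
agrees_with_human = preference_loss(2.0, 0.0)     # model prefers the human's pick
disagrees_with_human = preference_loss(0.0, 2.0)  # model prefers the rejected answer
print(agrees_with_human < disagrees_with_human)
```

Minimizing this loss over many human-labeled pairs pushes the reward model to score human-preferred outputs higher.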

RLHF is the fire that open source has taken.

The scale of open source scares Google

What scares Google in particular is the fact that the Open Source movement is able to scale its projects in a way that closed source cannot.

The Q&A dataset used to create the open source ChatGPT clone, Dolly 2.0, was created entirely by thousands of employee volunteers.

Google and OpenAI relied partly on questions and answers scraped from sites like Reddit.

The open source Q&A dataset created by Databricks is said to be of higher quality because the humans who contributed to creating it were professionals and the answers they provided were longer and more substantial than those found in a typical dataset of questions and answers taken from a public forum.

The leaked memo noted:

“In early March, the open source community got its hands on its first truly capable foundation model, as Meta’s LLaMA was leaked to the public.

It had no instruction or conversation tuning, and no RLHF.

However, the community immediately understood the importance of what had been given to them.

A huge outpouring of innovation followed, with only days between major developments…

Here we are, barely a month later, and there are variants with instruction tuning, quantization, quality improvements, human evals, multimodality, RLHF, etc. etc., many of which build on each other.

Most importantly, they’ve solved the problem of scaling to the extent that anyone can play.

Many of the new ideas come from ordinary people.

The barrier to entry for training and experimentation has dropped from the total output of a major research organization to one person, an evening, and a beefy laptop.”

In other words, what took months and years for Google and OpenAI to train and build took only days for the open source community.

This must be a truly terrifying scenario for Google.

It’s one of the reasons I’ve been writing so much about the open source AI movement: it truly looks like where the future of generative AI will be within a relatively short period of time.

Open source has historically outperformed closed source

The note cites recent experience with OpenAI’s DALL-E, the deep learning model used to create images, compared to the open source Stable Diffusion as a harbinger of what is currently happening in generative AI such as Bard and ChatGPT.

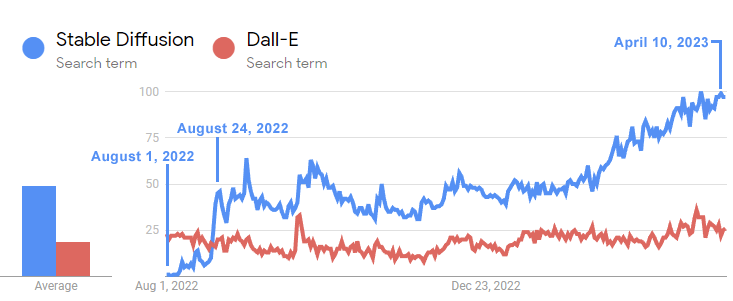

DALL-E was released by OpenAI in January 2021. Stable Diffusion, the open source alternative, was released a year and a half later in August 2022 and within weeks overtook DALL-E in popularity.

The Google Trends timeline above shows how quickly interest in the open source Stable Diffusion model overtook DALL-E, far exceeding it within three weeks of release.

And even though DALL-E had been out for a year and a half, interest in Stable Diffusion continued to grow exponentially while OpenAI’s DALL-E remained stagnant.

The existential threat of similar events overtaking Bard (and OpenAI) is giving Google nightmares.

The open source model creation process is superior

Another factor alarming Google’s engineers is that the process of creating and improving open source models is fast, inexpensive, and lends itself perfectly to a global collaborative approach common to open source projects.

The note observes that new techniques such as LoRA (Low-Rank Adaptation of Large Language Models) allow language models to be fine-tuned in a matter of days at exceedingly low cost, with the final LLM comparable to the far more expensive LLMs created by Google and OpenAI.
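To make the cost argument concrete, here is a minimal pure-Python sketch of the idea behind LoRA: the pretrained weight matrix W stays frozen, and only a small low-rank pair of matrices B and A is trained, so the layer computes W x + (alpha / r) * B (A x). The toy matrices, the 4096-wide layer, and the rank of 8 are illustrative assumptions, not figures from the memo.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, alpha=1.0):
    """Compute W x + (alpha / r) * B (A x) without ever forming B A."""
    r = len(a)                          # rank = number of rows in A
    base = matvec(w, x)                 # frozen pretrained path
    low_rank = matvec(b, matvec(a, x))  # small trainable path
    scale = alpha / r
    return [p + scale * q for p, q in zip(base, low_rank)]


def trainable_params(d_in, d_out, r):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_in * d_out, r * (d_in + d_out)


# Toy 2x3 frozen weight with a rank-1 adapter.
W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, 0.0]]
A = [[1.0, 1.0, 1.0]]   # shape r x d_in
B = [[0.5], [0.0]]      # shape d_out x r
x = [1.0, 2.0, 3.0]

y = lora_forward(W, A, B, x, alpha=1.0)
full, lora = trainable_params(4096, 4096, 8)
print(y, full, lora, full // lora)
```

Under these assumed sizes the adapter trains 65,536 values instead of 16,777,216 for that one matrix, a 256-fold reduction, which is the kind of saving that makes fine-tuning on modest hardware plausible. Adapters are also small enough to share and to stack on top of earlier work.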

Another advantage is that open source engineers can build on previous work and iterate, rather than having to start from scratch.

Today it is not necessary to build large language models with billions of parameters in the way that OpenAI and Google have been doing.

This may be the point that Sam Altman hinted at when he recently said that the era of massive large language models is over.

The author of the Google memo contrasted LoRA’s cheap and fast approach to building LLMs with today’s big AI approach.

The author of the note reflects on Google’s shortcoming:

“In contrast, training giant models from scratch not only throws away the pretraining, but also any iterative improvements that have been made on top of it. In the open source world, it doesn’t take long before these improvements dominate, making a full retrain extremely costly.

We should think about whether every new application or idea really needs a completely new model.

… In fact, in terms of engineer hours, the rate of improvement of these models far exceeds what we can do with our largest variants, and the best ones are already largely indistinguishable from ChatGPT.”

The author concludes by realizing that what they thought was their advantage, their giant models and concomitant prohibitive cost, was actually a disadvantage.

The global collaborative nature of open source is more efficient and orders of magnitude faster in innovation.

How can a closed source system compete against the overwhelming crowd of engineers from around the world?

The author concludes that Google cannot compete and that direct competition is, in the author’s words, a “losing proposition”.

This is the crisis, the storm, unfolding outside Google.

If you can’t beat open source, join it

The only consolation the memo’s author finds in open source is that since open source innovations are free, Google can also take advantage of them.

Finally, the author concludes that the only approach open to Google is to own the platform, in the same way that it dominates the open source Chrome and Android platforms.

They point out how Meta is benefiting from releasing its LLaMA large language model for research and how it now has thousands of people doing its work for free.

Perhaps the big takeaway from the note is that in the near future Google may try to replicate its open source dominance by launching its own open source projects and thereby owning the platform.

The memo concludes that using open source is the most viable option:

“Google should establish itself as a leader in the open source community, taking the lead by cooperating with, rather than ignoring, the broader conversation.

This probably means taking some awkward steps, such as publishing the model weights for small ULM variants. This necessarily means giving up some control over our models.

But this compromise is inevitable.

We cannot hope to both drive innovation and control it.”

Open source walks away with AI fire

Last week I alluded to the Greek myth of the human hero Prometheus stealing fire from the gods on Olympus, pitting the open source Prometheus against the “Olympian gods” of Google and OpenAI:

I tweeted:

“While Google, Microsoft and Open AI squabble with each other and have their backs turned, is Open Source walking off with their fire?”

The leak of the Google memo confirms this observation, but also points to a possible shift in strategy at Google to join the open source movement and thereby co-opt and dominate it in the same way that it did with Chrome and Android.

Read the leaked Google memo here:

Google “We don’t have a moat, and neither does OpenAI”
