OpenAI has met the requirements of the Italian Garante, ending Italy’s nearly month-long ban on ChatGPT. The company made several changes to its services, including clarifying how it uses personal data, to comply with European data protection legislation.

The resolution comes as the European Union moves closer to enacting the Artificial Intelligence Act, which aims to regulate AI technology and may affect generative AI tools in the future.

OpenAI meets Garante requirements

According to a statement from the Italian Garante, OpenAI resolved its issues with the regulator, ending the nearly month-long ban on ChatGPT in Italy. The Garante tweeted:

“#GarantePrivacy recognizes the steps taken by #OpenAI to reconcile technological advances with respect for people’s rights and expects the company to continue its efforts to comply with European data protection legislation.”

To comply with the Garante’s request, OpenAI did the following:

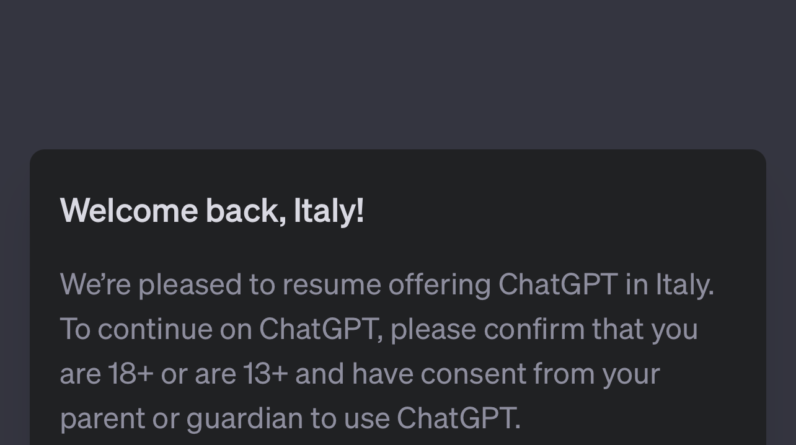

Screenshot from ChatGPT, April 2023

Although OpenAI resolved this complaint, it is not the only legislative hurdle facing AI companies in the EU.

AI Act is close to becoming law

Before ChatGPT gained 100 million users in two months, the European Commission proposed the EU Artificial Intelligence Act as a way to regulate the development of AI.

This week, almost two years later, members of the European Parliament reportedly agreed to move the EU AI Act to the next stage of the legislative process. Lawmakers could work on the details before voting begins in the next two months.

The Future of Life Institute (FLI) publishes a fortnightly newsletter covering the latest developments in the EU AI Act and related press coverage.

A recent open letter from FLI calling on all AI labs to pause AI development for six months received over 27,000 signatures. Notable names supporting the pause include Elon Musk, Steve Wozniak and Yoshua Bengio.

How might the AI Act affect generative AI?

Under the EU AI Act, AI technology would be classified by level of risk. Tools that could impact security and human rights, such as biometric technology, would have to meet stricter regulations and government oversight.

Generative AI tools would also have to disclose the use of copyrighted material in their training data. Given the pending lawsuits over open source code and copyrighted art used in training data by GitHub Copilot, Stable Diffusion and others, this would be a particularly interesting development.

As with most new legislation, AI companies will incur compliance costs to ensure their tools meet regulatory requirements. Larger companies will be better able than smaller ones to absorb the additional costs or pass them on to users, which could lead to less innovation from underfunded entrepreneurs and startups.

Featured Image: 3rdtimeluckystudio/Shutterstock
