Iterative testing is crucial to the ongoing success of any PPC campaign.

As we inevitably hand more of this testing process over to the ad platform’s AI, gaining insight into what has worked becomes more complicated.

For example, responsive search ads can include up to 15 headlines and four descriptions, which can be combined in a virtually endless variety of ways. We receive limited data on top-performing combinations and assets, and without a clear plan it can be difficult to turn that data into actionable insights for future ad tactics.

Fortunately, Google’s ad variations feature lets you test changes to ads within a single campaign or across multiple campaigns. It splits traffic so that one subset of users sees the original version and a separate subset sees the modified version.

This capability allows you to test different offers, messaging types, asset orders and more. The value of using ad variations over standard Google Ads experiments lies in the ease of setup and the ability to test across multiple campaigns.

We’ll start with an overview of how to set up ad variations and then move on to practical ideas for using them in your accounts.

Setting up an ad variation

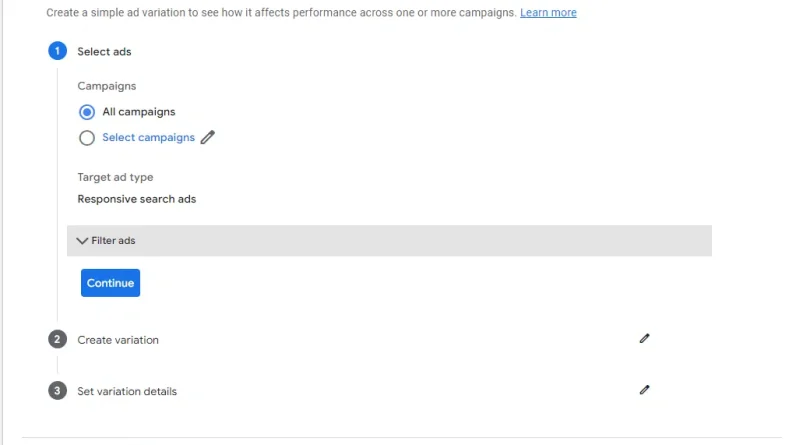

To get started, go to Campaigns > Experiments in the left menu and select Ad Variations on the page that appears.

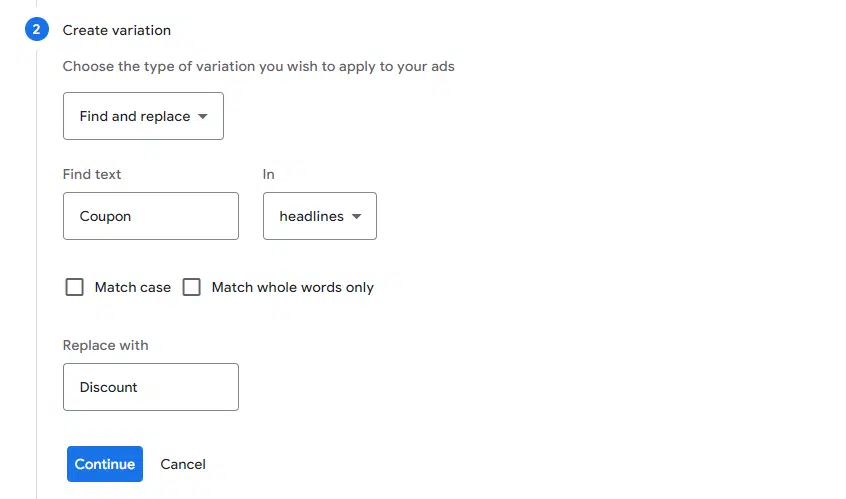

You can choose between three types of variations:

- Find and replace: swap specific text in your ads for new text.
- Update URLs: change the final URL, the display path or both.
- Update text: add or remove headlines or descriptions, and pin or unpin assets.
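To make the find-and-replace option more concrete, here is a minimal Python sketch of what such a variation does to a set of headlines. It runs entirely offline and does not call any Google Ads API. The `find_and_replace` helper and the sample headlines are hypothetical, and the length check assumes the 30-character limit on responsive search ad headlines.

```python
HEADLINE_MAX_CHARS = 30  # assumed RSA headline length limit used for the check


def find_and_replace(headlines: list[str], find: str, replace: str) -> list[str]:
    """Return a new list with every occurrence of `find` swapped for `replace`.

    Raises ValueError if a rewritten headline would exceed the length limit,
    the kind of problem worth catching before launching a variation.
    """
    updated = []
    for headline in headlines:
        new_text = headline.replace(find, replace)  # case-sensitive replacement
        if len(new_text) > HEADLINE_MAX_CHARS:
            raise ValueError(f"Headline too long after replacement: {new_text!r}")
        updated.append(new_text)
    return updated


# Hypothetical control headlines
control = ["Get Your Coupon Today", "Widgets Shipped Fast", "Coupon: Save 25%"]
variation = find_and_replace(control, "Coupon", "Offer")
print(variation)
```

Python’s `str.replace` is case-sensitive, so a lowercase “coupon” would not be matched here; before launching, check how your search term matches the text in your actual ads.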

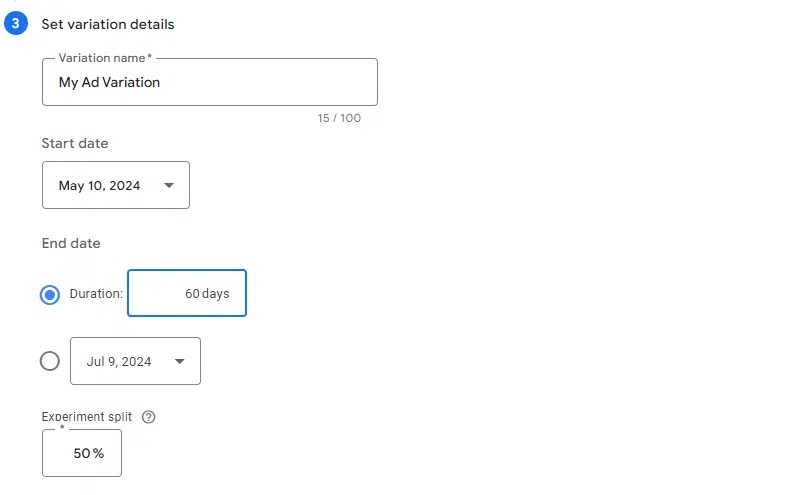

The final step is to set up the details of your variation. Here you can enter a name, define a start date and an end date (duration must be 84 days or less), and determine the split of the experiment.
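The duration rule is easy to sanity-check before you click through the setup screen. The sketch below is an illustration, not Google’s validation logic: it assumes both the start and end dates count as days the variation runs, and the helper names are hypothetical.

```python
from datetime import date

MAX_DURATION_DAYS = 84  # limit stated for ad variations


def variation_duration_days(start: date, end: date) -> int:
    """Days the variation runs, assuming both start and end dates are counted."""
    if end < start:
        raise ValueError("end date must not be before start date")
    return (end - start).days + 1


def is_valid_duration(start: date, end: date) -> bool:
    """True if the planned run fits the 84-day limit under the counting assumption above."""
    return variation_duration_days(start, end) <= MAX_DURATION_DAYS


# Hypothetical schedule: March 1 through May 23, 2024
print(variation_duration_days(date(2024, 3, 1), date(2024, 5, 23)))
print(is_valid_duration(date(2024, 3, 1), date(2024, 5, 24)))
```

If Google counts the duration differently (for example, excluding the end date), shift the boundary by one day accordingly.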

Note that the experiment split defines the percentage of the budget allocated to the variation and, in turn, the percentage of auctions the variation can enter. A 50% split does not mean impressions will be divided exactly in half between the control and the variation, however, because one version may earn a higher impression share than the other.
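A simplified model shows why a 50% split can still produce lopsided impressions. The sketch assumes, hypothetically, that each arm enters its share of auctions and then wins impressions at its own impression share; the auction count and impression shares are made-up numbers, not data from a real account.

```python
def expected_impressions(auctions: int, split: float, impression_share: float) -> float:
    """Simplified model: auctions an arm may enter, times the share of them it wins."""
    return auctions * split * impression_share


# Hypothetical inputs: 10,000 eligible auctions with a 50/50 split
auctions = 10_000
control = expected_impressions(auctions, 0.5, 0.60)    # control wins 60% of its auctions
variation = expected_impressions(auctions, 0.5, 0.45)  # variation wins 45% of its auctions

variation_share = variation / (control + variation)
print(f"control={control:.0f}, variation={variation:.0f}, variation share={variation_share:.1%}")
```

With these inputs, the variation receives about 43% of impressions rather than 50%, even though the split itself is even.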

According to Google’s documentation, each user is assigned to a variant via a cookie and will continue to see only that version of the ad. Keep in mind that private browsing, third-party cookie blocking and similar factors may reduce the accuracy of this assignment.
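Cookie-based assignment is what keeps a returning user in the same arm. The article doesn’t describe Google’s exact mechanism, so the following is only a hypothetical Python sketch of one common approach: hashing an identifier into a bucket. The `assign_arm` function and the cookie IDs are illustrative assumptions.

```python
import hashlib


def assign_arm(cookie_id: str, variation_split: float) -> str:
    """Map a cookie ID to 'control' or 'variation' deterministically (hypothetical scheme)."""
    digest = hashlib.sha256(cookie_id.encode("utf-8")).digest()
    # Take 6 bytes (48 bits) so the division yields an exact float in [0, 1)
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "variation" if bucket < variation_split else "control"


# The same cookie always gets the same arm, so a returning user sees one version
print(assign_arm("cookie-abc123", 0.5))
print(assign_arm("cookie-abc123", 0.5))
```

Because the assignment depends only on the cookie ID, clearing cookies or browsing privately produces a new ID that may land in the other arm, which is the accuracy caveat mentioned above.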

Launching an ad variation

Be sure to check your settings carefully, as you can’t change your ad variation once it’s launched (other than pausing or applying it). When you’re sure, click Create Variation to finish the process.

The variation will go live once it has passed Google’s review process and reached its start date. While you can technically launch a variation that starts on the same day you create it, it’s probably best to schedule it to start the next day or later for cleaner data.

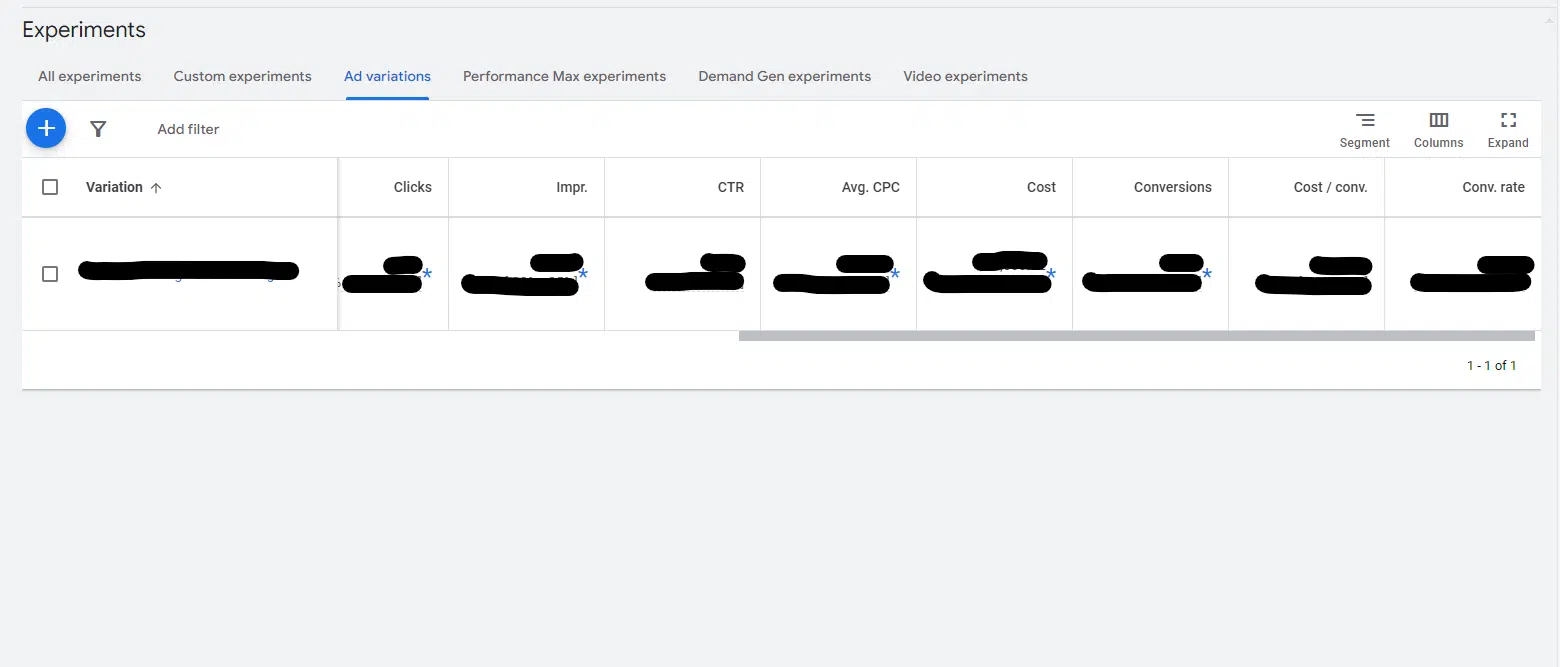

View performance data

Once your variation starts accumulating data, you’ll see the numbers appear in the performance table. You can add columns for any specific metrics you want to include here.

Once the difference in performance between the control and the variation becomes statistically significant, the percentage difference and its confidence interval will appear in the table.
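If you want to sanity-check a result outside the interface, a standard two-proportion z-test on conversion rate is one way to do it. This is a textbook technique swapped in for illustration, not necessarily the method Google Ads uses to compute its confidence intervals, and the conversion and click counts below are hypothetical.

```python
import math


def compare_conversion_rates(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int,
                             z_crit: float = 1.96) -> dict:
    """Compare conversion rates of control (A) and variation (B).

    Returns the absolute difference (B - A), relative lift, z statistic,
    two-sided p-value and an approximate 95% confidence interval.
    """
    p_a = conv_a / clicks_a
    p_b = conv_b / clicks_b
    diff = p_b - p_a

    # Pooled proportion and standard error under the null hypothesis of no difference
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    z = diff / se_pooled

    # Two-sided p-value: erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|))
    p_value = math.erfc(abs(z) / math.sqrt(2))

    # Unpooled standard error for the confidence interval around the difference
    se = math.sqrt(p_a * (1 - p_a) / clicks_a + p_b * (1 - p_b) / clicks_b)
    return {
        "diff": diff,
        "relative_lift": diff / p_a,
        "z": z,
        "p_value": p_value,
        "ci": (diff - z_crit * se, diff + z_crit * se),
    }


# Hypothetical results: control 120 conversions from 4,000 clicks, variation 165 from 4,100
result = compare_conversion_rates(120, 4000, 165, 4100)
print(result)
```

In this made-up example, the variation’s conversion rate is about one percentage point higher and the 95% interval for the difference stays above zero, so the result would count as significant at that level.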

You can also click the variation name to see top-level metrics, as well as ad-level metrics comparing the original and modified ads.

What metrics should you focus on?

Ideally, you have conversion tracking set up correctly in your account (if not, resolve this before setting up a test), and you should be looking at conversion rate and cost per conversion across variations. If you assign values to your conversions, include ROAS as well.
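These metrics are simple ratios, so it can help to compute them yourself from exported numbers. The sketch below uses hypothetical figures and a hypothetical `ArmStats` class; ROAS here is conversion value divided by cost.

```python
from dataclasses import dataclass


@dataclass
class ArmStats:
    """Exported totals for one arm of the test (hypothetical structure)."""
    name: str
    clicks: int
    conversions: float
    cost: float
    conversion_value: float = 0.0

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cost_per_conversion(self) -> float:
        return self.cost / self.conversions if self.conversions else float("inf")

    @property
    def roas(self) -> float:
        return self.conversion_value / self.cost if self.cost else 0.0


control = ArmStats("control", clicks=4000, conversions=120, cost=6000.0, conversion_value=18000.0)
variation = ArmStats("variation", clicks=4100, conversions=165, cost=6100.0, conversion_value=23100.0)

for arm in (control, variation):
    print(f"{arm.name}: CVR {arm.conversion_rate:.2%}, "
          f"cost/conv {arm.cost_per_conversion:.2f}, ROAS {arm.roas:.2f}")
```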

Ultimately, your testing should focus on generating more sales or leads at a more efficient cost. You can also consider CTR as an indicator of how relevant the ad is to users, with one caveat: in some cases, an ad change may deliberately discourage unqualified users from clicking, which lowers CTR even though the change is working as intended.
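The CTR caveat is easiest to see with numbers. In this hypothetical sketch (all figures invented), the variation earns fewer clicks per impression but converts them more cheaply, which is the pattern you’d expect if the new copy screens out unqualified users.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate: clicks divided by impressions."""
    return clicks / impressions if impressions else 0.0


def cost_per_conversion(cost: float, conversions: float) -> float:
    """Cost per conversion; infinite when there are no conversions."""
    return cost / conversions if conversions else float("inf")


# Hypothetical results with the same number of impressions in each arm
control = {"impressions": 80_000, "clicks": 4_000, "conversions": 120, "cost": 6_000.0}
variation = {"impressions": 80_000, "clicks": 3_200, "conversions": 128, "cost": 4_800.0}

ctr_change = ctr(variation["clicks"], variation["impressions"]) - ctr(control["clicks"], control["impressions"])
cpa_change = (cost_per_conversion(variation["cost"], variation["conversions"])
              - cost_per_conversion(control["cost"], control["conversions"]))

if ctr_change < 0 and cpa_change < 0:
    # Fewer clicks, but each conversion costs less: the copy may be filtering out poor-fit users
    print(f"CTR down {abs(ctr_change) * 100:.1f} percentage points, "
          f"cost per conversion down {abs(cpa_change):.2f}")
```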

Based on the data you see, you can decide whether to apply the variation’s changes directly or make the updates manually. After the test is complete, you can come back to this section to review it alongside any other past tests.

Ad variation ideas

Now that we’ve discussed the process of starting an ad variation test and reviewing the data, let’s conclude with some practical tips for using this feature in your account.

- Test wording changes in your ad copy. For example, swap “coupon” for “offer” to see which generates a better response, or try different CTAs (“Call” vs. “Schedule online”).
- Test location-specific ad copy against more generic ad copy.
- Instead of updating all of your ads for a specific sales period, run a test that pits seasonal messaging against generic messaging to see what impact your seasonal copy really has compared with your tried-and-true copy.
- Thinking of renaming a product or service? Try the name change on a portion of your ads to see how people respond.
- Pin headlines in different orders. For example, you can compare performance when an offer such as “Save 25%” appears in the first headline position versus the second.
- Check whether pinning assets works better than leaving them unpinned.
- Try removing headlines and descriptions to see how a leaner ad performs against one using the maximum number of assets.
- A/B test your landing pages by using the “Update URLs” option to insert the URL of another page variant.
- Test display path changes to see the effect on user response, or to see whether including a display path affects performance at all. For example, does tailoring the display path to the keyword/ad group theme work better than highlighting an offer in it (example.com/widgettype vs. example.com/save$100)?
- How much attention do people pay to descriptions in the SERP? Test how much changing ad descriptions affects performance when everything else is held equal.

Ultimately, you’ll want to think about your accounts’ business goals and design tests that produce evidence tied to those goals.

Plan how you will implement the results of each test you run, along with any follow-up tests.

The opinions expressed in this article are those of the guest author and not necessarily those of Search Engine Land.
