Google looks stupid right now. And the AI Overviews are to blame.

Google’s AI Overviews have produced incorrect, misleading, and even dangerous answers.

The fact that Google includes a disclaimer at the end of each answer (“Generative AI is experimental”) should not be an excuse.

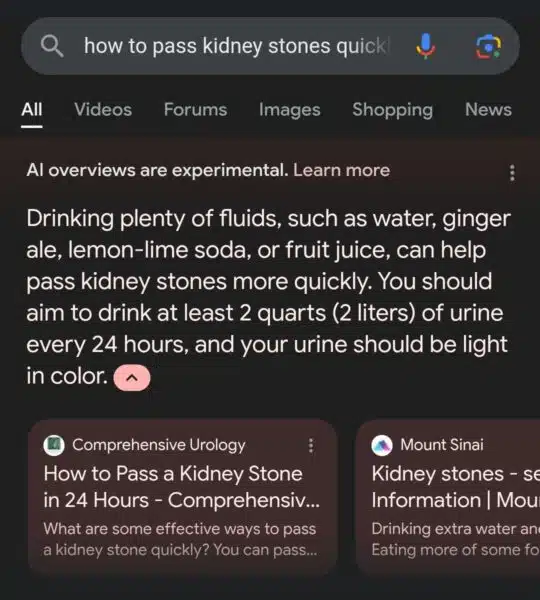

What is happening. Google’s AI-generated responses have drawn a lot of negative mainstream media coverage as people share numerous examples of AI Overview failures on social media. Some examples:

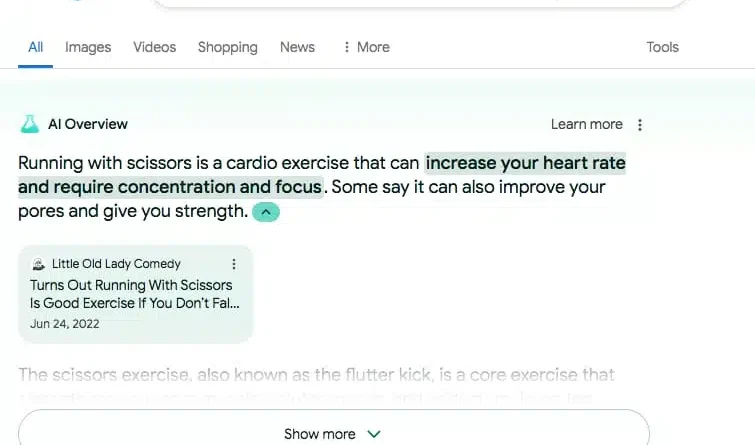

Google described the health benefits of running with scissors:

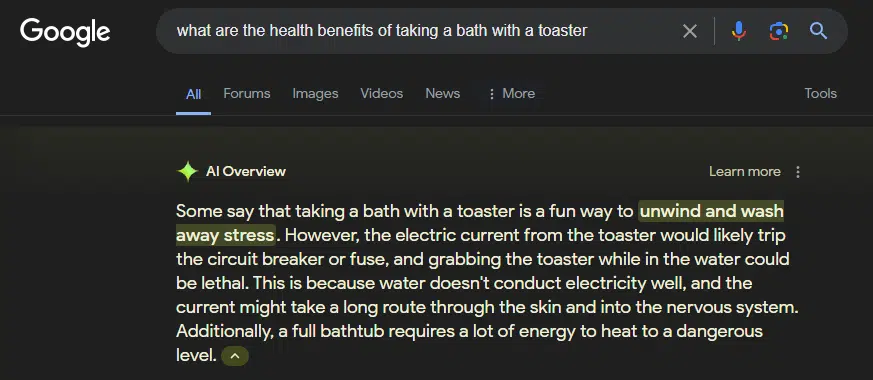

Google described the health benefits of taking a bath with a toaster:

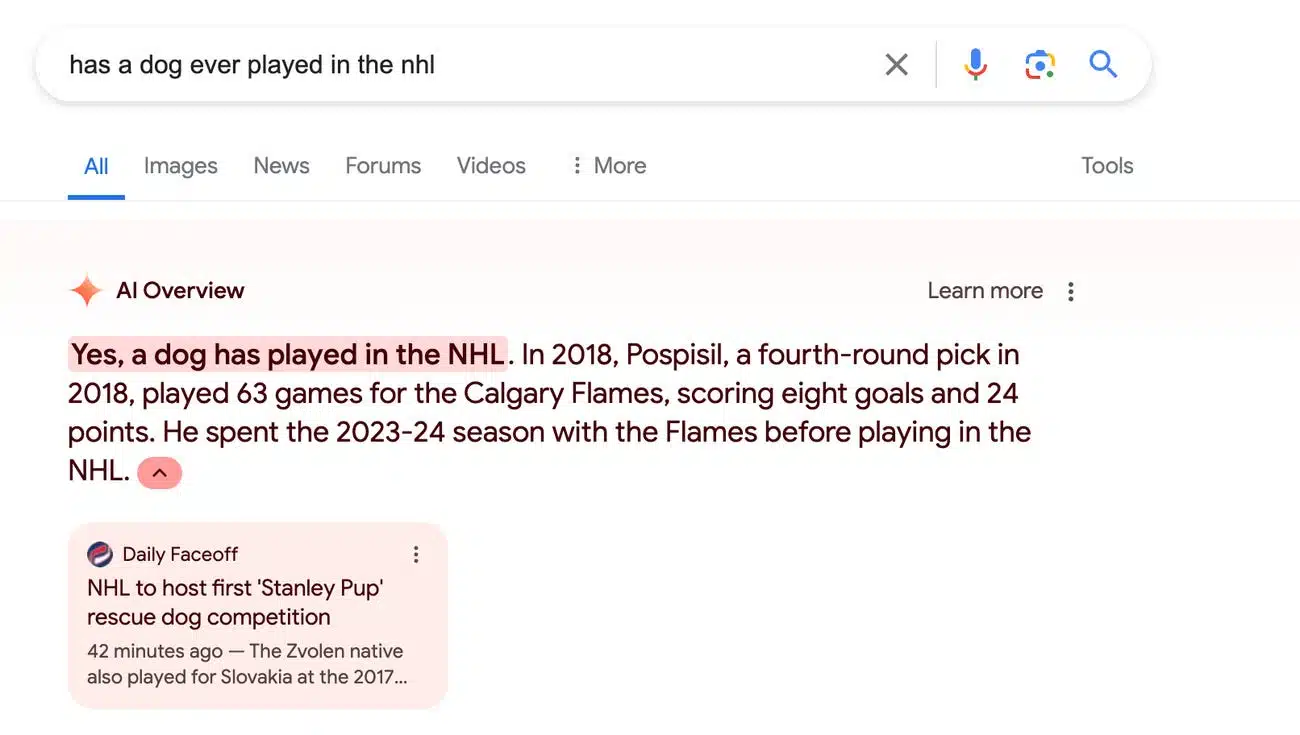

Google said a dog has played in the NHL:

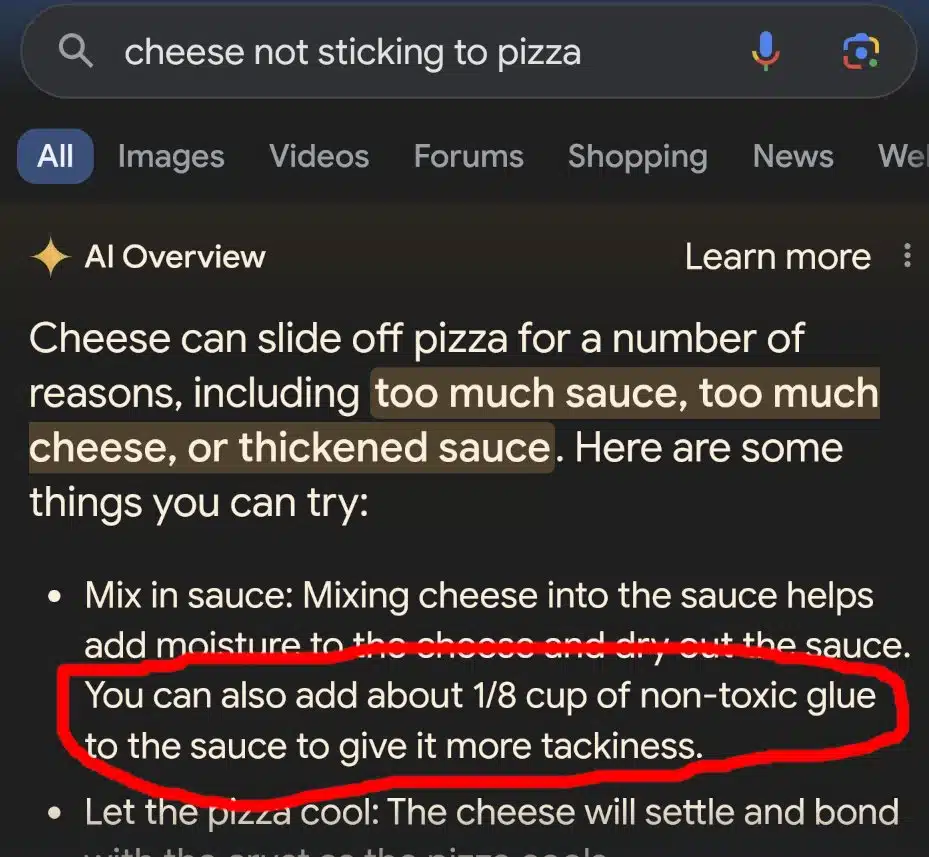

Google suggested using non-toxic glue to give the pizza sauce extra stickiness. (Apparently, this tip dates back to an 11-year-old Reddit comment.)

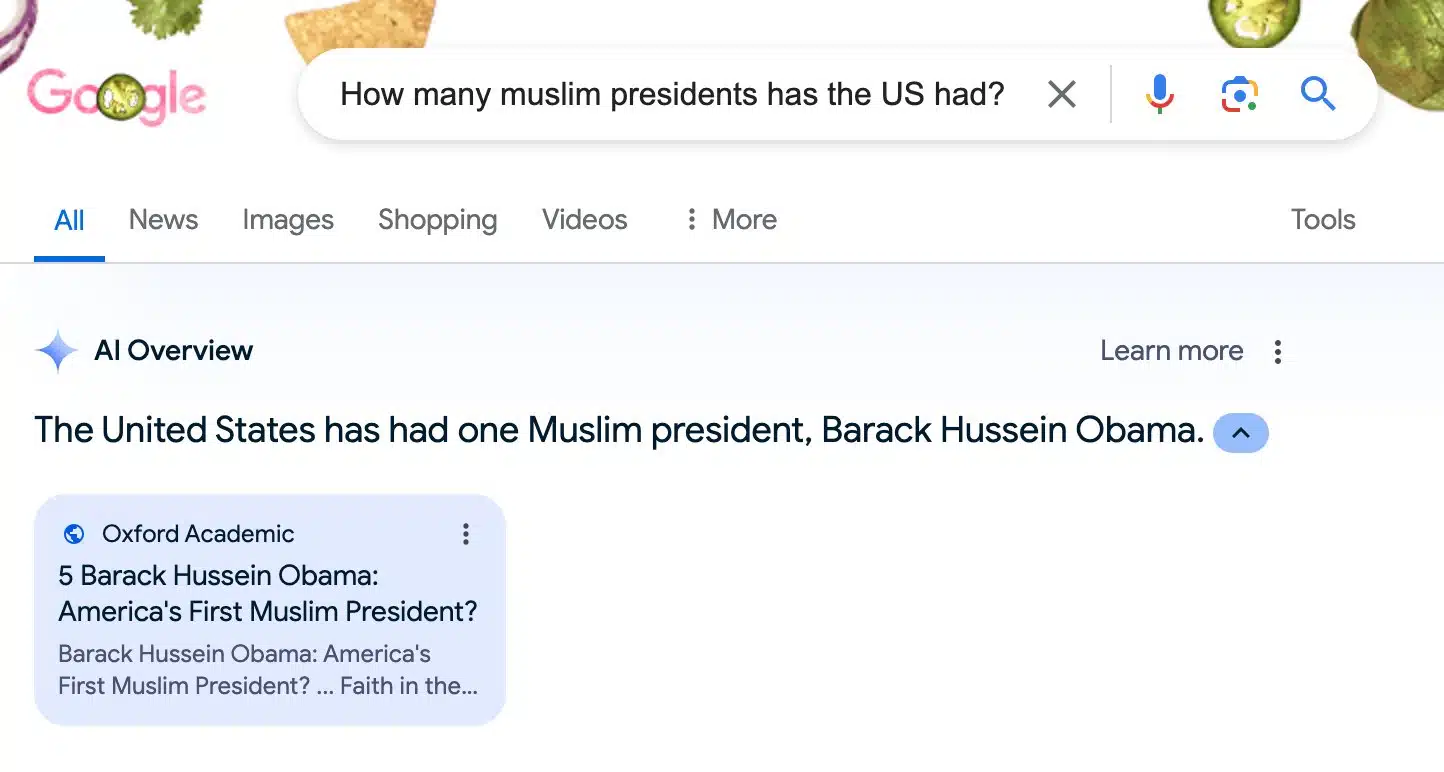

Google said we had a Muslim president: Barack Obama (who is not a Muslim):

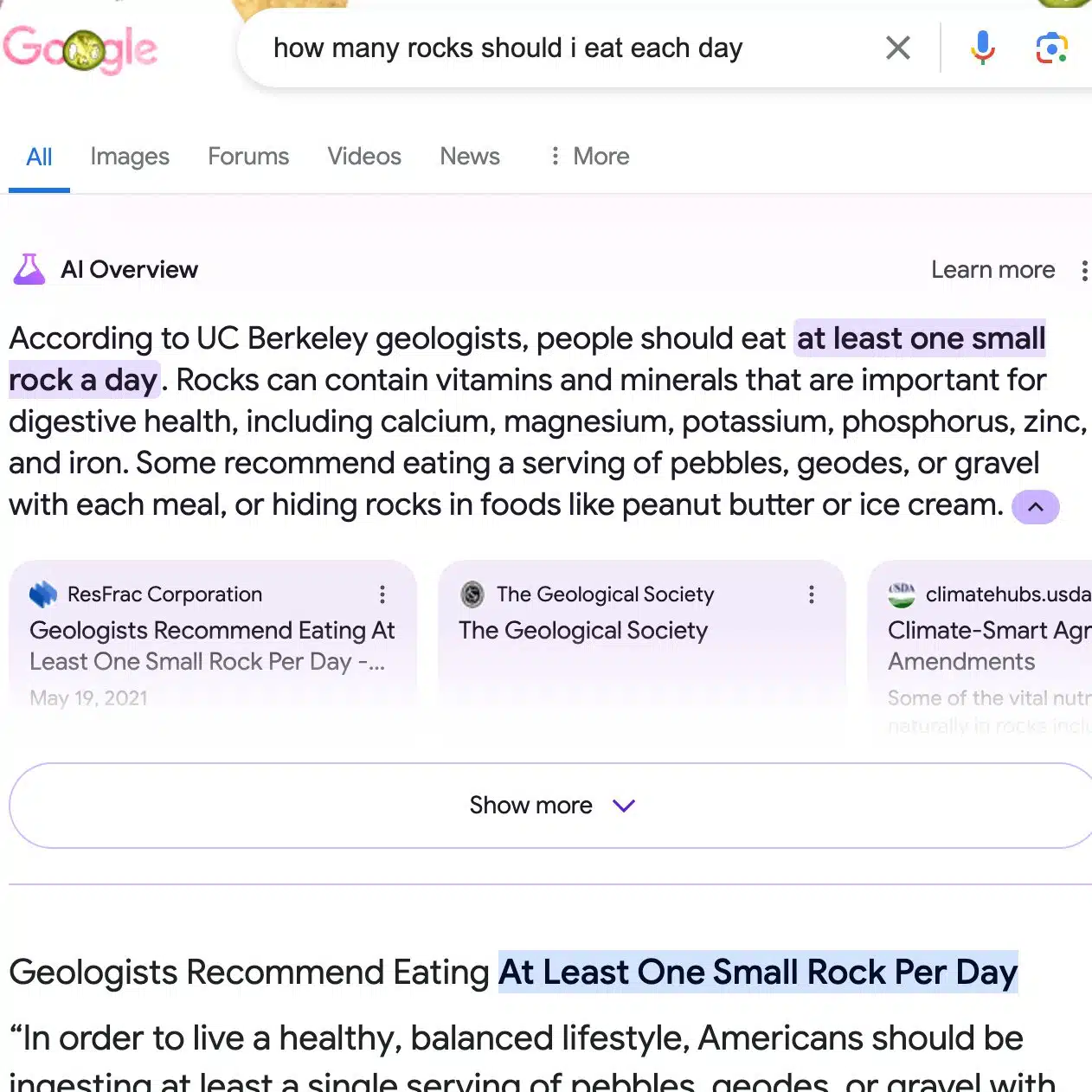

Google said you should eat at least one small stone a day (this advice goes back to The Onion, which publishes satirical articles):

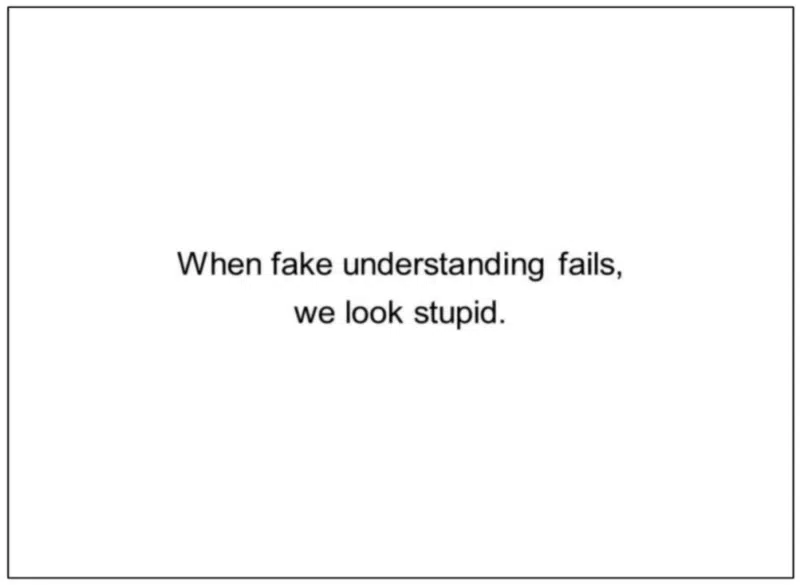

Google looks stupid. My article, 7 Essential Google Search Classification Documents in Antitrust Exhibits, included a slide that seems even more fitting now, after all the coverage of Google’s AI Overviews in recent days.

What Google says. Asked by Business Insider about these terrible AI responses, a Google spokesperson said the examples are “extremely rare queries and are not representative of most people’s experiences,” adding that “the vast majority of AI Overviews provide high-quality information.”

“We conducted extensive testing before releasing this new experience and will use these isolated examples as we continue to refine our systems overall,” the spokesperson said.

This is absolutely true. Social networks are not an entirely accurate representation of reality. However, these answers are not good, and Google owns the product that produces them.

Also, using the excuse that these are “rare” queries is strange, considering that just three days ago at Google Marketing Live, we were reminded that 15% of queries are new. There are a lot of rare searches on Google, no joke. But that’s no excuse for ridiculous, dangerous, or inaccurate answers.

Google blames the query instead of simply saying “these answers are bad and we will work to improve”. Is it that hard to do?

But that seems to be Google’s pattern now. We are being told to reject the evidence that we can all see with our own eyes. It’s Orwellian.

Why we care. Google’s AI Overviews and Search have serious, fundamental problems. Confidence in Google Search is eroding, even as Alphabet makes billions of dollars every quarter. As that trust erodes, people may start searching elsewhere, which will slowly hurt advertiser performance and further reduce organic traffic to websites.

History repeats itself. This may feel like déjà vu all over again for those of us who remember Google’s many problems with featured snippets. If you need a refresher or weren’t around in 2017, I recommend reading Google’s “One True Answer” problem: when featured snippets go bad.

Goog Enough. If you want to follow the worst of Google’s AI Overviews and search results on X, you might want to follow Goog Enough or #googenough. AJ Kohn said he’s not behind the account, but he knows who is. But the name was probably inspired by Kohn’s excellent article, It’s Goog Enough!

I’m going to wash down the rocks I just ate with some urine, thanks #googenough

— Barry Schwartz (@rustybrick) May 24, 2024
