The robots.txt file on the personal blog of Google’s John Mueller became a focus of interest when someone on Reddit claimed that the blog had been hit by the helpful content system and subsequently de-indexed. The truth turned out to be less dramatic than that, but it was still a little weird.

SEO subreddit post

The saga of John Mueller’s robots.txt began when a Redditor posted that Mueller’s website had been de-indexed, claiming that it had fallen afoul of Google’s algorithm. As ironic as that would have been, it was never going to be the case: it took only a few seconds of looking at the website’s robots.txt to see that something strange was going on.

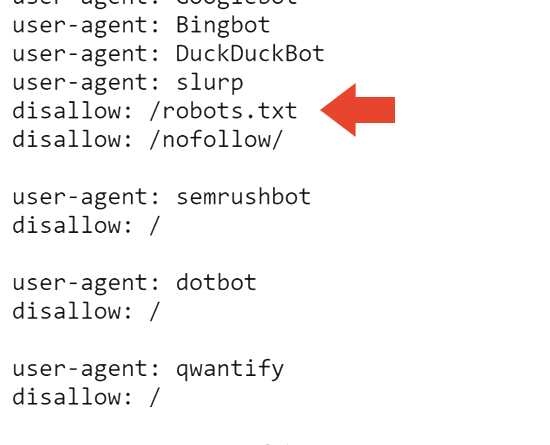

Here’s the top of Mueller’s robots.txt with a commented easter egg for those who take a look.

The first bit that you don’t see every day is a disallow on the robots.txt file itself. Who uses their robots.txt to tell Google not to crawl their robots.txt?

Now we know.

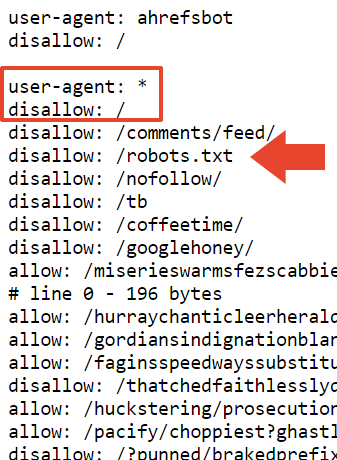

The next part of the robots.txt file blocks all search engines from crawling the website and robots.txt.

This probably explains why the site is de-indexed in Google. But it doesn’t explain why it’s still indexed by Bing.

I asked Adam Humphreys, a web developer and SEO, who suggested that Bingbot might not have been around Mueller’s site because it’s a largely inactive website.

Adam messaged me with his thoughts:

“User-Agent: *

Disallow: /topsy/

Disallow: /crets/

Disallow: /hidden/file.html

In these examples, the folders and the file in that folder would not be found.

He’s saying to disallow the robots file, which Bing ignores but Google listens to.

Bing would ignore improperly implemented robots directives because many don’t know how to do this.”

Adam also suggested that perhaps Bing ignored the robots.txt file altogether.

He explained it to me this way:

“Yes, or it chooses to ignore a directive not to read an instruction file.

Improperly implemented robots directives are likely to be ignored by Bing. This is the most logical answer for them. It’s a hint file.”

The robots.txt was last updated sometime between July and November 2023, so Bingbot may not have seen the latest version. That would make sense because Microsoft’s IndexNow web crawling system prioritizes efficient crawling.

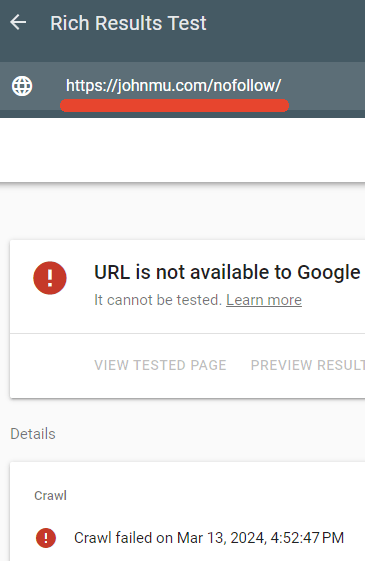

One of the directories blocked by Mueller’s robots.txt is /nofollow/ (which is a strange name for a folder).

There is basically nothing on this page except some site navigation and the word “Redirector.”

I tested whether the robots.txt was actually blocking this page, and it was.

Google’s Rich Results Test could not crawl the /nofollow/ web page.
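A similar check can be approximated locally with Python’s standard-library `urllib.robotparser`. The sketch below is only an illustration: the sample rules are hypothetical and modeled on the ones described in this article, not copied from Mueller’s file, and Python’s parser does not necessarily resolve conflicting rules the way Googlebot does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules modeled on those described in the article;
# this is NOT a copy of Mueller's actual robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /robots.txt
Disallow: /nofollow/
"""


def is_blocked(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if the given rules disallow `path` for `user_agent`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network fetch is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, path)


for candidate in ("/nofollow/", "/robots.txt", "/"):
    print(candidate, "blocked:", is_blocked(SAMPLE_ROBOTS_TXT, "Googlebot", candidate))
```

To test a live site instead, `RobotFileParser` also offers `set_url()` and `read()`, which fetch the file over the network before `can_fetch()` is called.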

Explained by John Mueller

Mueller seemed amused that so much attention was being paid to his robots.txt and posted an explanation on LinkedIn of what was happening.

He wrote:

“But what about the file? And why is your site deindexed?

Someone suggested it might be because of the Google+ links. It’s possible. And back to the robots.txt… it’s fine; I mean, it’s how I want it, and crawlers can deal with it. Or they should be able to, if they follow RFC9309.”

He then said that the disallow on the robots.txt was simply there to prevent the file from being indexed as an HTML file.

He explained:

“‘disallow: /robots.txt’ – Does this make the robots go in circles? Does this de-index your site? Nope.

My robots.txt file just has a lot of stuff, and it’s cleaner if it’s not indexed with its content. This only prevents the robots.txt file from being crawled for indexing purposes.

I could also use the x-robots-tag HTTP header with noindex, but that way I have it in the robots.txt file as well.”
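The header-based alternative Mueller mentions could look roughly like the following. This is a minimal sketch, assuming a Python WSGI application; the function name `app` and the file body are hypothetical, and nothing here reflects how Mueller’s own site is served.

```python
# Hypothetical file body; not Mueller's actual robots.txt.
ROBOTS_BODY = b"User-agent: *\nDisallow: /private/\n"


def app(environ, start_response):
    """Minimal WSGI app that serves /robots.txt with a noindex header."""
    if environ.get("PATH_INFO") == "/robots.txt":
        headers = [
            ("Content-Type", "text/plain; charset=utf-8"),
            # Asks search engines not to index this URL as a document,
            # while still letting crawlers fetch and obey the rules in it.
            ("X-Robots-Tag", "noindex"),
        ]
        start_response("200 OK", headers)
        return [ROBOTS_BODY]

    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found\n"]


# To try it locally (standard library only):
#   from wsgiref.simple_server import make_server
#   make_server("127.0.0.1", 8000, app).serve_forever()
```

Unlike a disallow rule, the header leaves the file crawlable and only addresses whether the URL itself shows up in search results.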

Mueller also had this to say about the file size:

“The size comes from testing the various robots.txt testing tools my team and I have worked on. The RFC says that a crawler should parse at least 500 kibibytes (bonus likes to the first person who explains what kind of snack that is). You have to stop somewhere; you can make pages infinitely long (and I have, and many people have, some even on purpose). In practice, what happens is that the system that checks the robots.txt file (the parser) will make a cut somewhere.”

He also said he added a disallow at the top of that section in the hope that it would be picked up as a “blanket disallow,” but I’m not sure which disallow he’s talking about. His robots.txt file has exactly 22,433 disallows.

He wrote:

“I added a “disallow: /” at the top of this section, so I hope it’s picked up as a blanket disallow. It’s possible that the parser will cut off in an awkward place, like a line that has “allow: /cheeseisbest” and stops right at the “/”, which would put the parser at an impasse (and, fun fact, the allow rule will override if you have both “allow: /” and “disallow: /”). This, however, seems highly unlikely.”
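The awkward cut-off Mueller describes can be reproduced with a toy parser. The sketch below is a simplified illustration rather than Google’s parser: it ignores user-agent groups and wildcards, and the function names are made up for this example. It only models two behaviors from RFC 9309: a byte limit on how much of the file gets parsed (the RFC requires the limit to be at least 500 kibibytes), and the rule that the longest matching path wins, with allow preferred on a tie.

```python
MAX_BYTES = 500 * 1024  # 500 kibibytes, the minimum limit RFC 9309 permits


def parse_rules(raw: bytes, limit: int = MAX_BYTES):
    """Return (kind, path) pairs found in the first `limit` bytes only."""
    text = raw[:limit].decode("utf-8", errors="replace")
    rules = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        key, value = key.strip().lower(), value.strip()
        if key in ("allow", "disallow") and value:
            rules.append((key, value))
    return rules


def is_allowed(rules, path: str) -> bool:
    """Longest matching rule wins; on equal length, allow beats disallow."""
    best_len, allowed = -1, True
    for kind, rule_path in rules:
        if path.startswith(rule_path):
            n = len(rule_path)
            if n > best_len or (n == best_len and kind == "allow"):
                best_len, allowed = n, (kind == "allow")
    return allowed


raw = b"disallow: /\nallow: /cheeseisbest\n"

full = parse_rules(raw)
print(is_allowed(full, "/anything"))  # False: only "disallow: /" matches

# Simulate a parser that stops right after the "/" of the allow line.
cut = raw.index(b"/cheeseisbest") + 1
truncated = parse_rules(raw, limit=cut)
print(truncated)                           # [('disallow', '/'), ('allow', '/')]
print(is_allowed(truncated, "/anything"))  # True: the tie goes to allow
```

In this toy model, the truncated file ends up allowing everything, which is the edge case Mueller is joking about.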

And there it is. John Mueller’s strange robots.txt.

Robots.txt visible here:
