OpenAI published a response to The New York Times lawsuit, alleging that the Times used manipulative prompting techniques to induce ChatGPT to regurgitate lengthy excerpts, and stating that the lawsuit is based on misuse of ChatGPT in order to “cherry pick” examples.

The New York Times lawsuit against OpenAI

The New York Times filed a copyright infringement lawsuit against OpenAI (and Microsoft) alleging that ChatGPT “recites Times content verbatim” among other complaints.

The lawsuit introduced examples showing how GPT-4 could output large amounts of New York Times content without attribution, as evidence that GPT-4 infringes on New York Times content.

The allegation that GPT-4 outputs exact copies of New York Times content is important because it counters OpenAI’s insistence that its use of data is transformative, a legal concept tied to the fair use doctrine.

The United States Copyright Office defines fair use and describes transformative uses of copyrighted content:

“Fair use is a legal doctrine that promotes freedom of expression by allowing the unlicensed use of copyrighted works under certain circumstances.

… “transformative” uses are more likely to be considered fair. Transformative uses are those that add something new, with a further purpose or a different character, and do not replace the original use of the work.”

That’s why it’s important that The New York Times claims that OpenAI’s use of the content is not fair use.

The New York Times lawsuit against OpenAI states:

“Defendants insist that their conduct is protected as ‘fair use’ because their unlicensed use of copyrighted content to train GenAI models serves a new ‘transformative’ purpose. But there is nothing ‘transformative’ about using The Times content… Because the outputs of Defendants’ GenAI models compete with and closely mimic the inputs used to train them, copying the Times works for this purpose is not fair use.”

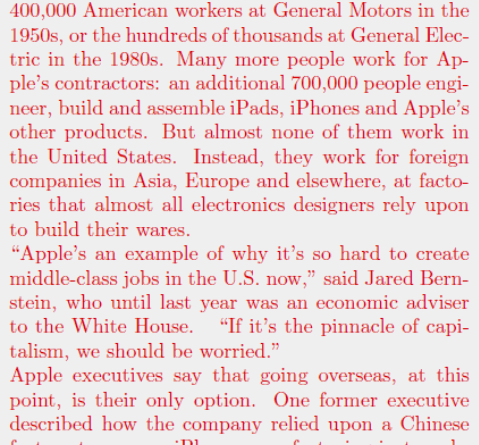

The screenshot below shows evidence of how GPT-4 produces an exact copy of the Times’ content. Content in red is original content created by the New York Times and published by GPT-4.

OpenAI’s response undermines the claims of the NYTimes lawsuit

OpenAI offered a strong rebuttal to the claims made in the New York Times lawsuit, claiming that the Times’ decision to go to court surprised OpenAI because they had assumed negotiations were moving toward a resolution.

Most importantly, OpenAI debunked The New York Times’ claim that GPT-4 outputs verbatim content. It explained that GPT-4 is designed not to output verbatim content and that The New York Times used prompting techniques specifically designed to break GPT-4’s guardrails in order to produce the disputed output. This undermines The New York Times’ implication that verbatim output is typical GPT-4 behavior.

This type of prompting, designed to break ChatGPT in order to generate unwanted output, is known as adversarial prompting.

Adversarial Prompting Attacks

Generative AI is sensitive to the types of prompts (requests) made of it. Despite the best efforts of engineers to block misuse of generative AI, there are still new ways to use prompts to generate responses that get around the guardrails built into the technology to prevent unwanted output.

The techniques used to generate unintended output are called Adversarial Prompting, and this is what OpenAI accuses The New York Times of doing in order to fabricate a basis for showing that GPT-4’s use of copyrighted content is not transformative.

OpenAI’s claim that The New York Times misused GPT-4 is important because it undermines the lawsuit’s insinuation that generating copyrighted content verbatim is typical behavior.

This type of adversarial prompting also violates OpenAI’s Terms of Use, which state:

What you can’t do

Use our Services in a manner that infringes, misappropriates or otherwise violates the rights of any person. Interfere with or disrupt our Services, including circumventing any rate limits or restrictions or circumventing any security protections or mitigations we put on our Services.

OpenAI claims lawsuit based on manipulated prompts

OpenAI’s rebuttal claims that The New York Times used manipulated prompts specifically designed to subvert GPT-4’s guardrails in order to generate verbatim content.

OpenAI writes:

“It appears that they intentionally manipulated the prompts, often including long snippets of articles, to get our model to regurgitate.

Even when they use these prompts, our models don’t usually behave in the way the New York Times suggests, suggesting that they either instructed the model to regurgitate or they chose their examples from many trials.”

OpenAI also responded to The New York Times’ lawsuit by saying that the methods used by The New York Times to generate verbatim content were misuse and not permitted user activity.

They write:

“Despite their claims, this misuse is not typical or permitted user activity.”

OpenAI ended by saying that it continues to build resistance against the kinds of adversarial prompt attacks used by The New York Times.

They write:

“In any case, we are continually making our systems more resilient to adversarial attacks to regurgitate training data, and we have already made great progress in our recent models.”

OpenAI supported its claim of due diligence on copyright by citing its July 2023 response to reports that ChatGPT was generating verbatim responses.

We’ve learned that ChatGPT’s “Browse” beta may occasionally display content in ways we don’t want, for example, if a user specifically requests the full text of a URL, it may inadvertently fulfill that request. We’re turning off browsing while we fix this – we want to make it right for content owners.

— OpenAI (@OpenAI) July 4, 2023

The New York Times versus OpenAI

There are always two sides to a story, and OpenAI has just published its side, arguing that The New York Times’ claims are based on adversarial attacks and misuse of ChatGPT to elicit verbatim responses.

Read OpenAI’s response:

OpenAI and journalism:

We support journalism, we partner with news organizations, and we believe the New York Times lawsuit is without merit.

Featured image by Shutterstock/pizzastereo
