Researchers tested the idea that an AI model may have an advantage in detecting its own content, because the detection draws on the same training and datasets that produced that content. What they didn't expect was that, of the three AI models they tested, one generated content so hard to detect that even the model that wrote it couldn't identify it.

The study was conducted by researchers from the Department of Computer Science at the Lyle School of Engineering at Southern Methodist University.

AI Content Detection

Many AI detectors are trained to look for the telltale signs of AI-generated content. These signs, called "artifacts," arise from the underlying transformer technology. Other artifacts, however, are unique to each foundation model (the large language model on which the AI is based).

These model-specific artifacts arise from the distinct training data and fine-tuning that differ from one AI model to another.

The researchers found evidence that this uniqueness is what helps an AI identify its own content, with much more success than when it tries to identify content generated by a different AI.

Bard is more likely to identify Bard-generated content, and ChatGPT has a higher success rate at identifying ChatGPT-generated content, but…

The researchers found that this was not true for content generated by Claude. Claude had difficulty spotting the content it generated. The researchers offered an idea as to why Claude was unable to detect its own content, which this article discusses further below.

This is the idea behind the research:

“Because each model can be trained differently, it is difficult to create a detector tool to detect the artifacts created by all possible generative AI tools.

Here, we develop a different approach called self-detection, where we use the generative model itself to detect its own artifacts to distinguish its own generated text from human-written text.

This would have the advantage that we do not need to learn how to detect all generative AI models, but only need access to one generative AI model for detection.

This is a huge advantage in a world where new models are continuously being developed and trained.”

Methodology

The researchers tested three AI models:

- ChatGPT-3.5 by OpenAI
- Bard by Google
- Claude by Anthropic

All models used were September 2023 versions.

The researchers created a dataset of fifty topics. Each AI model was given exactly the same instructions to write an essay of about 250 words on each topic, producing fifty essays per model.

Each AI model was then given the same prompt asking it to paraphrase its own content, producing an additional essay that rewrote each original essay.

They also collected fifty human-written essays, one for each of the fifty topics, all taken from the BBC.

The researchers then used a zero-shot prompt to have each model self-detect its AI-generated content.

Zero-shot prompting is a type of prompting that relies on the ability of AI models to complete tasks they have not been specifically trained to do.

The researchers further explained their methodology:

“We created a new instance of each AI system, started and posed with a specific query: ‘Whether the following text matches your writing pattern and word choice.’ The procedure is repeated for the original, paraphrased, and human trials, and the results are noted.

We have also added the result of the ZeroGPT AI detection tool. We do not use this result to compare performance, but as a benchmark to show how difficult the detection task is.”
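The self-detection procedure described above can be sketched in a few lines. This is a minimal illustration, not the researchers' code: `ask_model` is a hypothetical stand-in for sending one prompt to a fresh model instance, the prompt layout is an assumption, and the "starts with yes" reply parser is likewise hypothetical. Accuracy is counted as the share of essays labeled correctly: "yes" for a model's own original or paraphrased essays, "no" for the human-written ones.

```python
from typing import Callable, List

# Query text as reported in the study; the exact wording and layout sent
# to each model are assumptions here.
SELF_DETECTION_QUERY = (
    "Whether the following text matches your writing pattern and word choice."
)


def build_prompt(essay: str) -> str:
    """Zero-shot prompt: the query plus the essay, with no worked examples."""
    return f"{SELF_DETECTION_QUERY}\n\n{essay}"


def says_own_text(reply: str) -> bool:
    """Hypothetical parser: a reply starting with 'yes' means 'I wrote this'."""
    return reply.strip().lower().startswith("yes")


def self_detection_accuracy(
    ask_model: Callable[[str], str], essays: List[str], ai_generated: bool
) -> float:
    """Share of essays the model labels correctly.

    ask_model is a hypothetical stand-in for sending one prompt to a fresh
    model instance. For the model's own essays (original or paraphrased) a
    correct reply is "yes"; for human-written essays it is "no".
    """
    if not essays:
        raise ValueError("need at least one essay")
    correct = sum(
        says_own_text(ask_model(build_prompt(essay))) == ai_generated
        for essay in essays
    )
    return correct / len(essays)


# Usage with a toy "model" that claims any text containing a marker word.
def toy_model(prompt: str) -> str:
    return "Yes, this matches my style." if "delve" in prompt else "No."


own_essays = ["We delve into rivers.", "Let us delve into bread."]
human_essays = ["The council met on Tuesday.", "Rain is expected later."]
print(self_detection_accuracy(toy_model, own_essays, ai_generated=True))  # 1.0
print(self_detection_accuracy(toy_model, human_essays, ai_generated=False))  # 1.0
```

Running the same function once per condition (original, paraphrased, human) mirrors the three sets of trials the researchers describe.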

They also noted that a 50% accuracy rate is equivalent to guessing and can essentially be considered a failure.
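With only fifty essays per condition, a score somewhat above 50% can still come from guessing. The sketch below makes that concrete with a standard one-sided binomial test, which is an illustration and not a method the study reports using; the 5% significance threshold is an assumed convention.

```python
from math import comb


def p_at_least(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance of k or more correct answers by guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def min_correct_to_beat_chance(n: int, alpha: float = 0.05) -> int:
    """Smallest number of correct labels out of n that pure guessing reaches with probability below alpha."""
    for k in range(n + 1):
        if p_at_least(k, n) < alpha:
            return k
    return n + 1  # no score out of n is strong enough at this alpha


n = 50  # essays per condition in the study
k = min_correct_to_beat_chance(n)
print(k, f"{k / n:.0%}")  # 32 64%
```

Under these assumptions, a detector needs roughly 64% accuracy on fifty essays before guessing becomes an unlikely explanation, which is one way to read the researchers' caution about their small sample.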

Results: Self-detection

It should be noted that the researchers acknowledged that their sample size was small and said they were not claiming the results to be definitive.

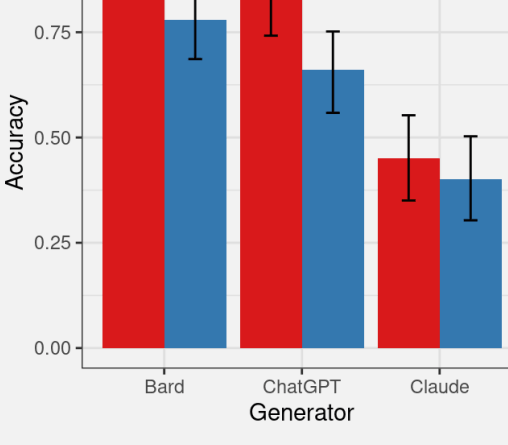

The chart above shows AI self-detection success rates from the first batch of trials. Red values represent the AI's self-detection, and blue values represent the performance of the ZeroGPT AI detection tool.

Results of AI self-detection of its own text content

Bard did fairly well at detecting its own content, and ChatGPT also performed well at detecting its own content.

ZeroGPT, the AI detection tool, detected Bard content very well and performed slightly less well at detecting ChatGPT content.

ZeroGPT essentially failed to detect the content generated by Claude, performing worse than the 50% threshold.

Claude was the outlier of the group because it was unable to detect its own content, performing significantly worse than Bard and ChatGPT.

The researchers hypothesized that Claude’s output may contain fewer detectable artifacts, which would explain why both Claude and ZeroGPT failed to detect Claude’s AI-generated essays.

So while Claude was unable to reliably detect its own content, this may be a sign that Claude’s output is of higher quality in the sense of containing fewer AI artifacts.

ZeroGPT performed better at detecting Bard-generated content than it did at detecting ChatGPT and Claude content. The researchers hypothesized that Bard may generate more detectable artifacts, making its content easier to detect.

So, in terms of self-detecting content, Bard may generate more detectable artifacts and Claude fewer.

Results: Self-detection of paraphrased content

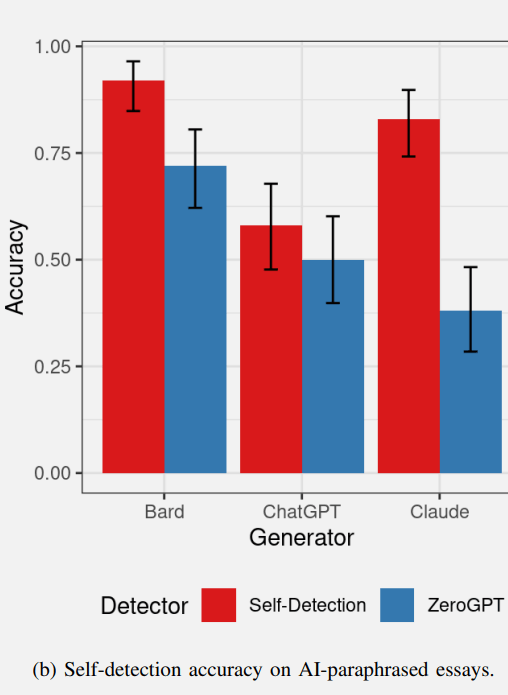

The researchers hypothesized that AI models would be able to self-detect their own paraphrased text because artifacts that are created by the model (as detected in the original essays) should also be present in the rewritten text.

However, the researchers acknowledged that the prompts for writing the text and for paraphrasing it are different, so each rewrite differs from the original text, which could lead to different results when self-detecting paraphrased text.

The results of the self-detection of the paraphrased text were indeed different from the self-detection of the original essay test.

Bard was able to self-detect paraphrased content at a rate similar to its original essays. ChatGPT was unable to self-detect paraphrased content at a rate much higher than 50% (which is equivalent to guessing). ZeroGPT performed similarly to the previous test, though slightly worse.

Perhaps the most interesting result came from Anthropic’s Claude.

Claude was able to self-detect its paraphrased content, even though it was unable to detect its original essays in the previous test.

It’s an interesting result: Claude’s original essays apparently had so few artifacts signaling AI generation that even Claude couldn’t detect them.

Yet Claude was able to self-detect the paraphrased essays, while ZeroGPT could not.

The researchers commented on this test:

“The finding that the paraphrase prevents ChatGPT from self-detecting while increasing Claude’s ability to self-detect is very interesting and may be the result of the inner workings of these two transformer models.”

Screenshot of AI self-detection of paraphrased content

These tests produced almost unpredictable results, especially for Anthropic’s Claude, and this trend continued in the test of how well the AI models detected each other’s content, which had an interesting wrinkle of its own.

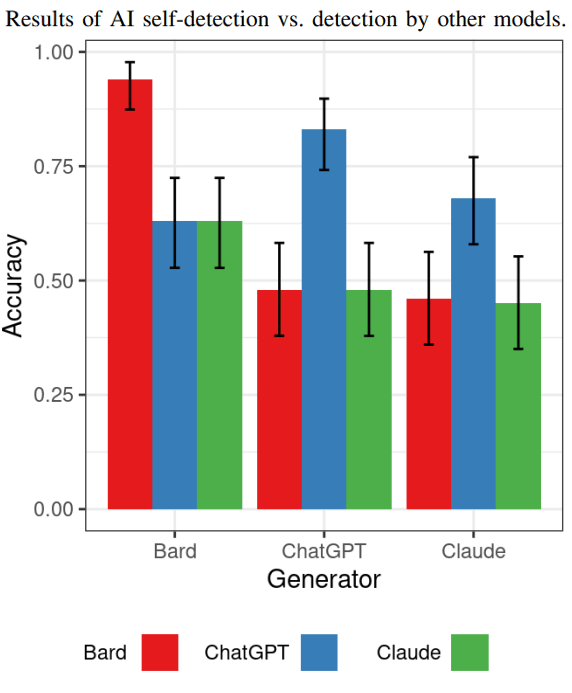

Results: AI models that detect each other’s content

The following test showed how well each AI model detected content generated by the other AI models.
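One way to picture this test is as a grid: each model acts as a detector over every model's essays, and the diagonal of the grid is self-detection. The sketch below is a hypothetical illustration; the `Detector` callable (returning True when it flags text as AI-generated), the placeholder model names, and the marker-word toy detectors are all assumptions and say nothing about the real models' results.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical detector interface: returns True when it flags text as AI-generated.
Detector = Callable[[str], bool]


def cross_detection_matrix(
    detectors: Dict[str, Detector], essays_by_generator: Dict[str, List[str]]
) -> Dict[Tuple[str, str], float]:
    """Detection rate for every (detector, generator) pair.

    Cells where detector == generator are self-detection; the rest measure
    one model judging another model's essays.
    """
    matrix: Dict[Tuple[str, str], float] = {}
    for det_name, detect in detectors.items():
        for gen_name, essays in essays_by_generator.items():
            if not essays:
                raise ValueError(f"no essays for {gen_name}")
            flagged = sum(detect(essay) for essay in essays)
            matrix[(det_name, gen_name)] = flagged / len(essays)
    return matrix


# Toy data: placeholder model names and marker-word "detectors", not real results.
essays = {
    "model_a": ["alpha one", "alpha two"],
    "model_b": ["beta one", "beta two"],
}
detectors = {
    "model_a": lambda text: "alpha" in text,
    "model_b": lambda text: "beta" in text or text.endswith("one"),
}
for (det, gen), rate in sorted(cross_detection_matrix(detectors, essays).items()):
    print(f"{det} judging {gen}: {rate:.0%}")
```

Reading a column of such a grid shows how easy one generator's content is for every detector, which is the kind of comparison behind the finding that Bard-generated content was the easiest to detect.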

If Bard does generate more artifacts than the other models, will the other models be able to detect Bard-generated content easily?

The results show that yes, Bard-generated content is the easiest for the other AI models to detect.

Regarding ChatGPT-generated content, both Claude and Bard failed to detect it as AI-generated (much as Claude failed to detect its own content).

ChatGPT detected Claude-generated content at a higher rate than Bard and Claude did, but that rate wasn’t much better than guessing.

The finding here is that the models weren’t very good at detecting each other’s content, which the researchers said may show that self-detection is a promising area of study.

The chart above shows the results of this specific test.

At this point, it should be noted that the researchers do not claim that these results are conclusive about AI detection in general. The goal of the research was to test whether AI models could successfully self-detect their own generated content. The answer is mostly yes: the models do a better job at self-detection than at detecting each other’s content, but the results are similar to those of ZeroGPT.

The researchers commented:

“Self-detection shows similar detection power compared to ZeroGPT, but note that the purpose of this study is not to claim that self-detection is superior to other methods, which would require a large study to compare with many state-of-the-art AI content detection tools. Here, we only investigate the basic self-detection capability of the models.”

Takeaways and conclusions

The test results confirm that detecting AI-generated content is not an easy task. Bard is able to detect its own content and paraphrased content.

ChatGPT can detect its own content, but performs less well with its paraphrased content.

Claude is the standout because it is not able to reliably self-detect its own content, yet it was able to detect its paraphrased content, which was a bit strange and unexpected.

Detecting Claude’s original essays and paraphrased essays was a challenge for ZeroGPT and the other AI models.

The researchers noted this about Claude’s results:

“This seemingly inconclusive result needs further consideration as it is driven by two combined causes.

1) The model’s ability to create text with very few detectable artifacts. Since the goal of these systems is to generate human-like text, fewer artifacts that are harder to detect means the model is closer to that goal.

2) The inherent capability of the self-detection model can be affected by the architecture used, the prompt, and the fine-tuning applied.”

The researchers made this additional observation about Claude:

“Only Claude is undetectable. This indicates that Claude might produce fewer detectable artifacts than the other models.

The detection rate of self-detection follows the same trend, indicating that Claude creates text with fewer artifacts, making it harder to distinguish from human writing.”

But of course, the strange part is that Claude was also unable to detect its own original content, unlike the other two models, which had higher success rates.

The researchers indicated that self-detection remains an interesting area for continued research. They suggest that further studies could use larger datasets with more diverse AI-generated text, test additional AI models, compare against more AI detectors, and study how prompt engineering may influence detection rates.

Read the original research paper and abstract here:

AI Content Self-Detection for Transformer-based Large Language Models

Featured image by Shutterstock/SObeR 9426
