Many are aware of the popular Chain of Thought (CoT) method of prompting generative AI to get better and more sophisticated answers. Researchers at Google DeepMind and Princeton University developed an improved prompting strategy called Tree of Thoughts (ToT) that takes prompting a step further, unlocking more sophisticated reasoning methods and better results.

The researchers explain:

“We show how deliberate search in trees of thought (ToT) produces better results and, more importantly, interesting and promising new ways of using language models to solve problems that require searching or planning.”

Researchers compare against three kinds of prompting

The research paper compares ToT with three other prompting strategies.

1. Input-output (IO) prompting

This is basically giving the language model a problem to solve and getting the answer.

An example based on text summarization is:

Input prompt: Summarize the following article.

Output: A summary based on the article that was input
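As a rough sketch, IO prompting amounts to a single call with no intermediate reasoning. The `io_prompt` helper and the `fake_llm` stand-in below are hypothetical names used only for illustration; a real setup would pass in whatever function calls the actual language model.

```python
def io_prompt(llm, instruction, text):
    """Send one prompt and return the model's answer as-is (no reasoning steps)."""
    prompt = f"{instruction}\n\n{text}"
    return llm(prompt)


# Stand-in "model" for demonstration only: it returns the first
# 40 characters of the article instead of a real summary.
def fake_llm(prompt):
    article = prompt.split("\n\n", 1)[1]
    return article[:40]


article = "Tree of Thoughts is a prompting strategy for language models."
summary = io_prompt(fake_llm, "Summarize the following article.", article)
print(summary)
```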

2. Chain of thought

This form of prompting is where a language model is guided to generate coherent and connected responses by encouraging it to follow a logical sequence of thoughts. The Chain of Thought (CoT) prompt is a way to guide a language model through the intermediate steps of reasoning to solve problems.

Example of the chain of thought:

Question: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

Reasoning: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer is 11.

Question: The cafeteria had 23 apples. If they used 20 for lunch and bought 6 more, how many apples do they have?
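The example above is a few-shot prompt: a worked question with its reasoning, followed by a new question for the model to reason through in the same way. A minimal sketch of assembling such a prompt as a string might look like this (the `build_cot_prompt` helper is a hypothetical name, not something from the paper):

```python
def build_cot_prompt(examples, question):
    """Join worked (question, reasoning) pairs, then append the new question."""
    parts = [f"Question: {q}\nReasoning: {r}" for q, r in examples]
    # Leave "Reasoning:" open so the model continues with its own steps.
    parts.append(f"Question: {question}\nReasoning:")
    return "\n\n".join(parts)


examples = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11. The answer is 11.",
    )
]
cot_prompt = build_cot_prompt(
    examples,
    "The cafeteria had 23 apples. If they used 20 for lunch and bought "
    "6 more, how many apples do they have?",
)
print(cot_prompt)
```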

3. Self-consistency with CoT

Simply put, this is a prompting strategy of asking the language model multiple times and then choosing the most frequent answer.

The research paper on self-consistency with CoT from March 2023 explains:

“It first samples a diverse set of reasoning paths instead of only taking the greedy one, and then selects the most consistent answer by marginalizing out the sampled reasoning paths. Self-consistency leverages the intuition that a complex reasoning problem typically admits multiple different ways of thinking leading to its unique correct answer.”
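The "most frequent answer" idea can be sketched as a simple majority vote. In the sketch below, `sample_answer` is a hypothetical callable standing in for one sampled chain-of-thought run that returns only the final answer; the canned answers are made up for illustration.

```python
from collections import Counter


def self_consistent_answer(sample_answer, prompt, n_samples=5):
    """Sample several final answers and return the most frequent one
    together with the share of samples that agreed with it."""
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / n_samples


# Stand-in sampler for demonstration only: replays canned answers,
# as if three of five reasoning paths had arrived at "9".
canned = iter(["9", "9", "8", "9", "10"])
result = self_consistent_answer(lambda prompt: next(canned), "How many apples?")
print(result)
```

With a real model, the sampler would need a non-zero temperature so that the reasoning paths actually differ between calls.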

Dual process models in human cognition

The researchers were inspired by a theory of human decision making called dual process models of human cognition, or dual process theory.

Dual-process models of human cognition propose that humans engage in two types of decision-making processes, one that is intuitive and fast and one that is more deliberative and slower.

Fast, automatic, unconscious

This mode involves fast, automatic, unconscious thinking that is often said to be based on intuition.

Slow, deliberate, conscious

This mode of decision making is a slow, deliberate and conscious thought process that involves careful consideration, analysis and step-by-step reasoning before reaching a final decision.

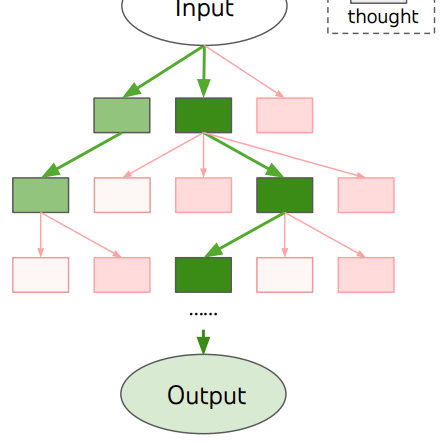

The Tree of Thoughts (ToT) prompting framework arranges the steps of the reasoning process in a tree structure, which allows the language model to evaluate each reasoning step and decide whether that step is viable and likely to lead to an answer. If the language model decides that a reasoning path will not lead to an answer, the prompting strategy requires it to abandon that path (or branch) and move on to another branch until it reaches the final result.
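To make the branch-and-abandon idea concrete, here is a minimal breadth-first sketch under stated assumptions. In the real method the `propose` and `evaluate` steps would be language model calls; here they are plain Python stand-ins on a toy task (pick steps of 2, 3 or 5 that sum to exactly 10). The function and parameter names are hypothetical, and this is not the authors' implementation.

```python
def tree_of_thoughts(root, propose, evaluate, is_solution,
                     beam_width=2, max_depth=5):
    """Breadth-first search over partial solutions ("thoughts").

    Branches scored 0 are abandoned; only the best `beam_width`
    branches are kept at each level.
    """
    frontier = [root]
    for _ in range(max_depth):
        scored = []
        for state in frontier:
            for candidate in propose(state):
                if is_solution(candidate):
                    return candidate
                score = evaluate(candidate)
                if score > 0:  # drop branches judged infeasible
                    scored.append((score, candidate))
        if not scored:
            return None  # every branch was abandoned
        scored.sort(key=lambda pair: pair[0], reverse=True)
        frontier = [candidate for _, candidate in scored[:beam_width]]
    return None


# Toy stand-ins (assumptions for illustration, not LM calls).
TARGET = 10


def propose(state):
    return [state + (step,) for step in (2, 3, 5)]


def evaluate(state):
    total = sum(state)
    return 0.0 if total > TARGET else total / TARGET


def is_solution(state):
    return sum(state) == TARGET


result = tree_of_thoughts((), propose, evaluate, is_solution)
print(result)
```

The key design choice is that evaluation happens at every intermediate step, so a weak branch is discarded early instead of being followed to the end.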

Tree of Thoughts (ToT) vs Chain of Thought (CoT)

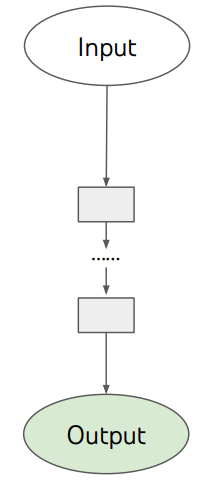

The difference between ToT and CoT is that ToT has a tree-and-branch framework for the reasoning process, while CoT takes a more linear path.

In simple terms, CoT tells the language model to follow a series of steps to accomplish a task, which resembles the System 1 cognitive model that is fast and automatic.

ToT resembles the System 2 cognitive model, which is more deliberative. It tells the language model to follow a series of steps but also has an evaluator step in and review each step: if it is a good step, keep going, and if not, stop and follow another path.

Illustrations of prompting strategies

The research paper includes schematic illustrations of each prompting strategy, with rectangular boxes representing a “thought” at each step toward completing the task or solving a problem.

Below is a screenshot of what ToT’s reasoning process looks like:

Illustration of Chain of Thought prompting

Here is the schematic illustration of CoT, showing how the thought process is more of a straight (linear) path:

The research paper explains:

“Research on human problem solving suggests that people search through a combinatorial problem space: a tree where nodes represent partial solutions and branches correspond to operators that modify them. Which branch to take is determined by heuristics that help navigate the problem space and guide the problem solver to a solution.

This perspective highlights two key shortcomings of existing approaches that use LMs to solve general problems:

1) Locally, they do not explore different continuations within a thought process – the branches of the tree.

2) Globally, they do not incorporate any kind of planning, lookahead, or backtracking to help evaluate these different options, the kind of heuristic-guided search that seems characteristic of human problem solving.

To address these shortcomings, we introduce Tree of Thoughts (ToT), a paradigm that allows LMs to explore multiple reasoning paths over thoughts…”

Tested with a math game

The researchers tested the method using a math game called Game of 24. Game of 24 is a mathematical card game in which players take four numbers from a set of cards, use each number exactly once, and combine them with basic arithmetic (addition, subtraction, multiplication and division) to get a result of 24.
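To make the rules of the game concrete, the sketch below checks by exhaustive search whether four numbers can reach 24. This is a plain brute-force check, not the ToT method and not code from the paper; the function names are hypothetical. Exact fractions are used so that division does not introduce rounding errors.

```python
from fractions import Fraction
from itertools import combinations


def can_make_24(numbers, target=24):
    """Return True if the numbers, each used exactly once, can be
    combined with +, -, * and / to reach the target."""
    return _search([Fraction(n) for n in numbers], Fraction(target))


def _search(values, target):
    if len(values) == 1:
        return values[0] == target
    # Pick any two numbers, replace them with one result, and recurse.
    for i, j in combinations(range(len(values)), 2):
        a, b = values[i], values[j]
        rest = [v for k, v in enumerate(values) if k not in (i, j)]
        results = {a + b, a - b, b - a, a * b}
        if b != 0:
            results.add(a / b)
        if a != 0:
            results.add(b / a)
        if any(_search(rest + [r], target) for r in results):
            return True
    return False


print(can_make_24([4, 9, 10, 13]))  # (10 - 4) * (13 - 9) = 24
print(can_make_24([1, 1, 1, 1]))    # no combination exceeds 4
```

Each step of this search replaces two numbers with one result, which mirrors the kind of intermediate step a "thought" represents in the game: a partial solution with fewer numbers left to combine.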

Results and conclusions

The researchers tested the ToT prompting strategy against the other three approaches and found that it produced consistently better results.

However, they also point out that ToT may not be necessary to complete tasks that GPT-4 already does well.

They conclude:

“The associative ‘System 1’ of LMs can be beneficially augmented with a ‘System 2’ based on searching a tree of possible paths to solving a problem.

The Tree of Thoughts framework provides a way to translate classical knowledge about problem solving into actionable methods for contemporary LMs.

At the same time, LMs address a weakness of these classical methods, providing a way to solve complex problems that are not easily formalized, such as creative writing.

We see this intersection of LM with classical AI approaches as an exciting direction.”

Read the original research paper:

Tree of Thoughts: Deliberate Problem Solving with Large Language Models
